In our work, we often need to stream video from iOS devices in real time or near real time. Common examples are using iOS devices as surveillance cameras and building streaming applications like Periscope. The problem statement often imposes additional requirements: the stream must play back smoothly on another device, in the browser, or in VLC player; latency must be low (near-real-time streaming); resource consumption must be low (long battery life); no dedicated media server should be required; and so on.

Category Archives: Online Video

How to make a great app preview video

If you are reading this, you most likely know that Apple has released iOS 8, which allows app developers to publish short 15-30 second preview videos of their apps. The App Store is very crowded, so making a great app preview video is something you’ll want to do as soon as possible!

In this article I will guide you through the process of making an app preview video as well as share some lessons I learnt while making a video for the Together app using Adobe Premiere Pro CC. The process took almost a whole day and I’m pretty sure that this post will save you a lot of time and make your video better! Continue reading

Video Delivery Analytics with Google Analytics

Many startups and new initiatives feature high-quality videos related to their business, and quite often they want to optimize the user experience around that content. This large and growing demand has brought many cloud infrastructure providers onto the market. In this article, I share our story of choosing the best infrastructure providers for our projects across the world. Our secret is measuring everything and making data-driven decisions, as there seem to be no silver bullets. Continue reading

Doğan TV Holding deploys DENIVIP Video Load Balancer

Moscow, Russia – January 20, 2014 – DENIVIP Media, a leading provider of video load balancing technologies, today announced the deployment of its Intelligent Video Load Balancing system for Doğan TV Holding. The tightly integrated solution delivered by DENIVIP Media enables NETD (http://www.netd.com), the leading digital entertainment service in Turkey, to use in-house infrastructure efficiently, manage third-party CDN providers, and assure the best quality of content delivery to its users.

Video content delivery is the cornerstone of any Internet broadcasting service. It affects both the quality of the user experience and the broadcaster's expenditures. The bigger the broadcaster, the more important effective video content delivery becomes. DENIVIP Video Load Balancer addresses both issues: it lets broadcasters deliver each piece of content to each viewer in the most effective way and, at the same time, reduce costs by choosing between in-house infrastructure and CDN providers.

One of the most important features of DENIVIP Video Load Balancer is its ability to route a user’s video player to the delivery point where the requested content is already cached. This so-called cache-aware load balancing is very important when you need to minimize the delay between the moment the play button is pressed and the moment playback actually begins.
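The idea of cache-aware routing can be illustrated with a minimal Python sketch. This is not the DENIVIP Video Load Balancer implementation: the `EdgeNode` type, its fields, the `pick_node` function and the `max_load` threshold are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeNode:
    """A delivery point; every field here is an illustrative assumption."""
    name: str
    load: float                                 # current utilisation, 0.0 to 1.0
    cached: set = field(default_factory=set)    # IDs of content held in cache


def pick_node(nodes, content_id, max_load=0.9):
    """Prefer the least-loaded node that already caches the content."""
    available = [n for n in nodes if n.load < max_load]
    if not available:
        raise RuntimeError("no delivery point has spare capacity")
    warm = [n for n in available if content_id in n.cached]
    # Fall back to any available node when no cache holds the content.
    candidates = warm or available
    return min(candidates, key=lambda n: n.load)


nodes = [
    EdgeNode("edge-a", load=0.2),
    EdgeNode("edge-b", load=0.6, cached={"video-42"}),
    EdgeNode("edge-c", load=0.95, cached={"video-42"}),
]
print(pick_node(nodes, "video-42").name)  # edge-b: cached and not overloaded
print(pick_node(nodes, "video-7").name)   # edge-a: nothing cached, least loaded
```

A node with a warm cache wins even when a colder node is less loaded, because serving from cache avoids the extra fetch that would delay the start of playback.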

Doğan Media Group experiences significant fluctuations in video load over time, so it was very important to handle possible overloads properly during special events or viral growth in demand. DENIVIP Video Load Balancer helps distribute traffic not only across internal infrastructure but also across a set of external CDN providers, making it simple to offload usage peaks to them.
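Peak offloading of this kind can be sketched in a few lines of Python. This is a simplified illustration under our own assumptions, not the product's actual algorithm: the `split_traffic` function, the Mbps units and the weighted split between CDNs are all hypothetical.

```python
def split_traffic(demand_mbps, internal_capacity_mbps, cdn_weights):
    """Serve from internal infrastructure first; spill the excess to CDNs.

    cdn_weights maps a (hypothetical) CDN name to its share of the overflow.
    """
    internal = min(demand_mbps, internal_capacity_mbps)
    overflow = demand_mbps - internal
    plan = {"internal": internal}
    total_weight = sum(cdn_weights.values())
    if total_weight <= 0:
        if overflow > 0:
            raise ValueError("overflow traffic but no CDN to offload to")
        return plan
    for name, weight in cdn_weights.items():
        plan[name] = overflow * weight / total_weight
    return plan


# Normal day: everything fits in-house, the CDNs receive nothing.
print(split_traffic(800, 1000, {"cdn-x": 3, "cdn-y": 1}))
# Special event: the 500 Mbps peak is split 3:1 between the two CDNs.
print(split_traffic(1500, 1000, {"cdn-x": 3, "cdn-y": 1}))
```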

“We were mastering intelligence of video load distribution over unstable public internet segments and broad geography for more than 5 years. I’m thrilled that our technology is serving millions of Internet video viewers in Turkey and beyond.” said Denis Bulichenko, CEO of DENIVIP Media.

“We were looking for a very sophisticated solution meeting our needs with reasonable licensing terms. It was a pleasure to discuss best practices of video load distribution and adopt the best ones in our project,” said Ziya Ozgur, OTT Headend Manager.

About Doğan TV Holding

Doğan Yayın Holding (DYH) is active in a wide range of fields including newspaper, magazine and book publishing, television and radio broadcasting and production, as well as the Internet, digital world, print and distribution. Content providers of the Group include newspapers, magazines, publishing houses, television channels, radio stations, as well as music and production companies. The Group’s service providers are made up of distribution, production, digital platform, news agency, Internet and printing companies, as well as a factoring company. For more information, please visit http://www.doganholding.com.tr.

About DENIVIP Media

DENIVIP Media is the leading supplier of scalable solutions for multiscreen content delivery. Founded in 2008 and headquartered in Moscow, Russia, the company pioneered the use of intelligent software load balancers to power video content delivery over IP networks. Providing unmatched solutions for leading media companies worldwide, DENIVIP Media helps pay TV operators, content programmers, film studios and sports broadcasters bring video to any screen in the most effective way. To learn more, please visit www.denivip.ru or blog.denivip.ru and follow @DENIVIPMedia on Twitter.

Making a Distributed Storage System

Hardly any project today can avoid storing a large number of media objects (video chunks, photos, music, etc.).

In our projects, we have often needed our storage system to be highly reliable, as loss of content often results in service interruption (I think this is true for most projects). Moreover, as the service grows, characteristics such as performance, scalability and manageability become critically important.

To store content, you can use various distributed file systems, but each of them has its own upsides and downsides, so selecting the optimal file system is by no means a trivial task. Recently, we solved this task for our Together project, an innovative mobile video content platform that handles user-generated content just like Netflix handles movies. We then started using the platform in our other services, such as PhotoSuerte to store photos and Veranda to store short videos. In most of our projects, the platform has proven efficient. In this post, we would like to tell you how to create distributed data storage for a video platform. Continue reading

Alternative Audio Tracks in HTML5 Video

A major advantage of HTTP Live Streaming, still unbeaten by any other standard, is that you can use any technology to compose multimedia content. You don’t need expensive media servers to handle media streams. You can easily delegate media composition to simple PHP / Python / Ruby / Node.js modules. As a long-time Flash lover, I was somewhat prejudiced against Apple’s video delivery standard. But as a developer, I use it every day to solve tasks that were almost unattainable with the previous stack (or required expensive software). Adobe HDS playlists have been really hard to deal with: just think of the binary data in f4m playlists. Solutions built on them take much more time to develop and debug. MPEG DASH is also far from intuitive.
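To show how little is needed to compose HLS content, here is a minimal Python sketch that builds a VOD media playlist as plain text. The `media_playlist` function and the segment file names are our own illustrative assumptions; only the playlist tags come from the HLS specification.

```python
import math


def media_playlist(segments):
    """Build a VOD media playlist from (uri, duration_seconds) pairs."""
    # The target duration must be an integer no smaller than any segment.
    target = math.ceil(max(duration for _, duration in segments))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        "#EXT-X-PLAYLIST-TYPE:VOD",
    ]
    for uri, duration in segments:
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


# Hypothetical segment names and durations, for illustration only.
print(media_playlist([("seg0.ts", 9.96), ("seg1.ts", 9.96), ("seg2.ts", 4.2)]))
```

Because the playlist is just text, any web framework can serve the result with the `application/vnd.apple.mpegurl` content type, and no media server is involved.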

In this post, we are going to discuss how to add an alternative audio track to your video. Although HTTP Live Streaming can streamline this task, there are some limitations, so you need certain hacks on the client side. In our Together project, we had to implement alternate soundtracks for user videos. Luckily, we use HTTP Live Streaming throughout the system. Continue reading
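For orientation, the standard HLS way to declare alternate audio is a master playlist with `EXT-X-MEDIA` audio renditions referenced from `EXT-X-STREAM-INF`. The Python sketch below generates such a playlist; it shows only the server-side declaration, not the client-side hacks mentioned above, and it is not necessarily how Together does it. The `master_playlist` function, the track names and the URIs are hypothetical.

```python
def master_playlist(video_uri, bandwidth, audio_tracks, group_id="audio"):
    """Build a master playlist; audio_tracks is a list of (name, language, uri).

    The first track in the list is marked as the default rendition.
    """
    lines = ["#EXTM3U", "#EXT-X-VERSION:4"]
    for index, (name, language, uri) in enumerate(audio_tracks):
        default = "YES" if index == 0 else "NO"
        lines.append(
            f'#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="{group_id}",NAME="{name}",'
            f'LANGUAGE="{language}",DEFAULT={default},AUTOSELECT=YES,URI="{uri}"'
        )
    # The AUDIO attribute ties the video variant to the audio group above.
    lines.append(f'#EXT-X-STREAM-INF:BANDWIDTH={bandwidth},AUDIO="{group_id}"')
    lines.append(video_uri)
    return "\n".join(lines) + "\n"


# Hypothetical tracks and paths, for illustration only.
print(master_playlist(
    "video/main.m3u8",
    1280000,
    [("Original", "en", "audio/original.m3u8"),
     ("Commentary", "en", "audio/commentary.m3u8")],
))
```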

Playlist Player for iOS

A player is a core part of your video application. If you have ever tried to build a player into your application, you probably know that setting it up and customizing it takes some time. We would venture to say that you mostly need a player to run playlists rather than individual videos. In this post, we would like to tell you about the open source component we use to play back videos in our Together project. Please welcome our playlist player, DVPlaylistPlayer. Continue reading

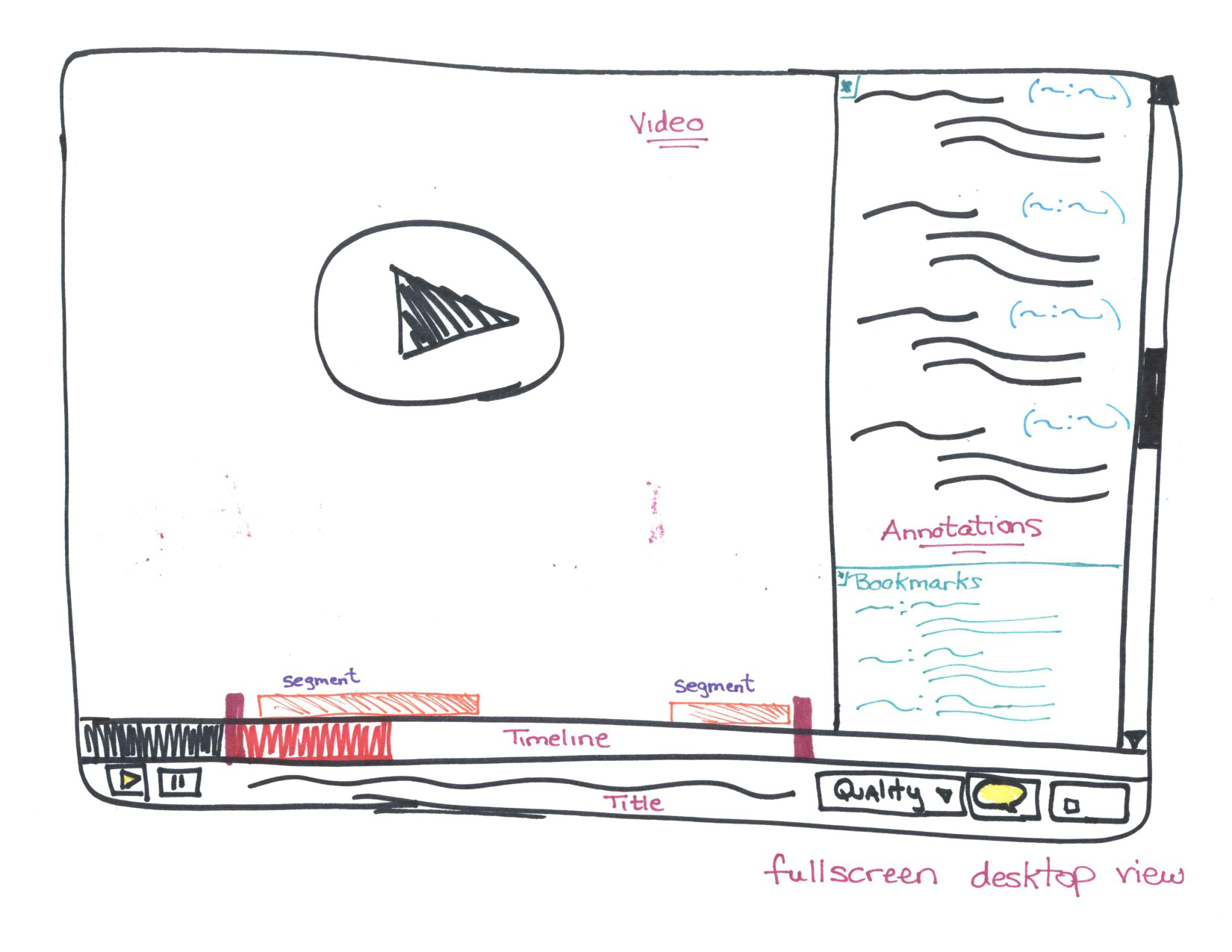

How to Design a Video Platform

In this post, we are going to dwell on the design of the video platform for our Together project. From a technical viewpoint, this project is remarkable in that it covers the entire content lifecycle, from creation on mobile devices to distribution and viewing. While designing the platform, we aimed for flexibility and cost-efficiency. With the new video platform, you can receive, store and share videos. All video management tasks were implemented on Apple HLS. Continue reading

OSMF HLS Plugin

While working on the Together project, I was assigned the task of enabling Apple HLS video playback on the Flash platform. Delivering video content in a single format (HLS in this case) is usually very easy and offers many benefits. To process video, Flash has the open source OSMF framework, which can be easily extended with various plugins. But there is one problem: the framework has no HLS support at all. Adobe promoted RTMP first, and only then offered HTTP Dynamic Streaming (HDS) as an alternative to Apple HLS. In this post, we’ll cover a free HLS plugin that we have developed to run HLS in OSMF-enabled video players. Continue reading

ConnectedTV: Applications for Philips NetTV

In this post, we are going to discuss development of applications for Philips NetTV. Continue reading