In our work, we often need to implement real-time or near real-time video streaming from iOS devices. Common examples are using iOS devices as surveillance cameras or building streaming applications like Periscope. The problem statement often imposes additional requirements: stream playback on another device, smooth playback in a browser or in VLC player, low latency (near real-time video), low resource consumption (long battery life), no need for a dedicated media server, and so on.

The problem itself is not new, but no single straightforward solution has emerged for it yet. More precisely, there is a whole range of possible solutions:

- HTTP Live Streaming (HLS)

- HDS

- MPEG-DASH

- fragmented MP4 (fMP4)

- RTSP

- WebRTC

- etc.

Each approach has its benefits and downsides. For example, in an earlier but still relevant post, How to Live Stream Video as You Shoot It in iOS, we wrote about on-device HLS stream generation. It allows you to do without a media server and upload content directly to a CDN (e.g., Amazon S3 + CloudFront). But because the HLS-based approach has inherent flaws (see below), this time we would like to discuss some better options: on-device generation of an fMP4 stream and RTP streaming based on a local RTSP server.

HTTP Live Streaming

HLS emerged in 2009 and quickly became relatively popular. This was helped by full support for HLS in the Apple ecosystem and by its fairly clear structure: there is an index file (or playlist) containing a list of links to video stream segments (or chunks), which are continuously added during a live broadcast. HLS also lets you define multiple alternate video streams for clients with different bitrate requirements (low/high bandwidth, etc.). However, this two-tier system, with its need to keep updating the playlist, has become a limitation of HLS. HLS performs poorly in real-time broadcasts because of the following issues:

- The stream is cut into small chunks (several seconds each, as recommended), and a chunk can only be served once it has been recorded in full; hence, the inherent latency might be too high for real-time.

- A standard guideline for video players is to buffer a minimum of 3 chunks. Hence, the latency from the previous item is tripled at the player level.

- For the player to learn of new chunks in the stream, it has to continually re-fetch the index file; this is another source of latency (otherwise the player would simply be unaware of what to show next).

- Each chunk is an MPEG-TS file, which adds substantial overhead on top of the core media content.
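To put rough numbers on the first three issues, here is a minimal back-of-the-envelope sketch. The function name and the assumption that the player polls the playlist about once per chunk are ours, for illustration only; real players and networks add further delays (encoding, upload, download) that are not modeled here.

```python
from typing import Optional


def hls_latency_estimate(chunk_seconds: float,
                         buffered_chunks: int = 3,
                         playlist_poll_seconds: Optional[float] = None) -> float:
    """Rough worst-case latency (seconds) caused by chunking alone."""
    if playlist_poll_seconds is None:
        # Assumption: the player re-fetches the playlist about once per chunk.
        playlist_poll_seconds = chunk_seconds
    # The player starts only after it has buffered several complete chunks,
    # so the first frame shown is already that many chunk durations old.
    buffering = chunk_seconds * buffered_chunks
    # Worst case: a chunk is published right after the player polled the
    # playlist, so it is discovered one full polling interval later.
    return buffering + playlist_poll_seconds


for seconds in (10, 4, 1):
    print(seconds, "s chunks ->", hls_latency_estimate(seconds), "s")
```

Under these assumptions, even one-second chunks leave several seconds of delay before network effects are taken into account.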

An obvious step to reduce latency in HLS would be to reduce the chunk duration to the minimum (1 second). However, with such small chunks the stream becomes sensitive to network instability. Under average real-world conditions it is almost impossible to achieve smooth playback: the player spends more resources on re-querying the index file and fetching the next chunk than on displaying the video itself.
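To make the re-querying cost concrete, the sketch below builds the kind of sliding-window playlist a player has to download again and again during a live broadcast. The tags are standard HLS playlist tags; the function name, the `chunk` file prefix, and the three-chunk window are hypothetical choices for illustration.

```python
import math


def live_playlist(first_sequence: int, durations: list, prefix: str = "chunk") -> str:
    """Build a live HLS media playlist for a window of consecutive chunks."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        # Upper bound on the duration of any chunk in the playlist.
        f"#EXT-X-TARGETDURATION:{math.ceil(max(durations))}",
        # Sequence number of the first chunk still listed in the window.
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}",
    ]
    for offset, duration in enumerate(durations):
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(f"{prefix}{first_sequence + offset}.ts")
    # No #EXT-X-ENDLIST tag: its absence tells the player the stream is live
    # and that the playlist must be fetched again to discover new chunks.
    return "\n".join(lines) + "\n"


print(live_playlist(7, [1.0, 1.0, 1.0]))
```

With one-second chunks, the player has to repeat this download roughly every second, in addition to fetching each new chunk.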

To resolve such issues, similar approaches were offered in 2011-2012: MPEG-DASH by MPEG, Smooth Streaming by Microsoft, and HDS by Adobe. However, none of them became the “de facto standard” (although, of the three, MPEG-DASH is a full-scale ISO standard), as they had similar underlying shortcomings and, moreover, Adobe’s and Microsoft’s solutions required special server-side support. Here you can find the table comparing these formats.

Fragmented MP4

As time went on, another approach became popular for simple broadcasts: fMP4 (fragmented MP4). It is a minor (but essential) extension of MP4, a well-known format that was almost ubiquitous even at that time, so broad support for fMP4 followed shortly after.

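The whole difference between a regular MP4 and fMP4 is in the arrangement of the elements describing the video and audio streams. In a conventional MP4 file, these elements (the moov block) index every frame in the file, so they can only be completed once recording has finished and are typically located at the end of the file. In fMP4, a short stream description is put at the beginning of the file, and each subsequent fragment carries its own small index (moof) in front of its media data (mdat). As MP4 could already contain multiple streams divided into separate data chunks, this simple change made the file “infinite” for the player.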

This very property of fMP4 is used for live streaming. First, the player reads the description of the video and audio streams, then waits for the data and plays them back as they arrive. If you generate your footage chunks on the fly, the player will automatically play back a real-time stream with no additional effort.
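At the byte level, an MP4 file is a sequence of boxes (atoms), each starting with a 32-bit big-endian size and a four-character type. The sketch below is only an illustration of that layout: it builds a fragmented stream with placeholder payloads (not playable media) and walks it the way a player would, handling each box as soon as it is complete. The helper names are hypothetical, and the special size values 0 and 1 defined by the format are deliberately not handled.

```python
import struct


def make_box(box_type: bytes, payload: bytes = b"") -> bytes:
    """Serialize one box: 4-byte size (header included), 4-byte type, payload."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


def iter_boxes(data: bytes):
    """Yield (type, payload) for every complete top-level box in data."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        if size < 8 or offset + size > len(data):
            break  # box is incomplete (or uses a special size): wait for more data
        yield box_type.decode("ascii"), data[offset + 8:offset + size]
        offset += size


# Stream description first, then an open-ended series of fragments.
stream = make_box(b"ftyp", b"isom") + make_box(b"moov")
for _ in range(2):
    stream += make_box(b"moof") + make_box(b"mdat", b"\x00" * 16)

print([box_type for box_type, _ in iter_boxes(stream)])
```

Because every fragment is self-describing, the generator can keep appending moof/mdat pairs for as long as the broadcast lasts.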

And it does work! Of course, certain issues may arise when implementing an fMP4 generator. To discuss them, let’s take our DemoFMP4 app as an example.

fMP4 Live Streaming on iOS

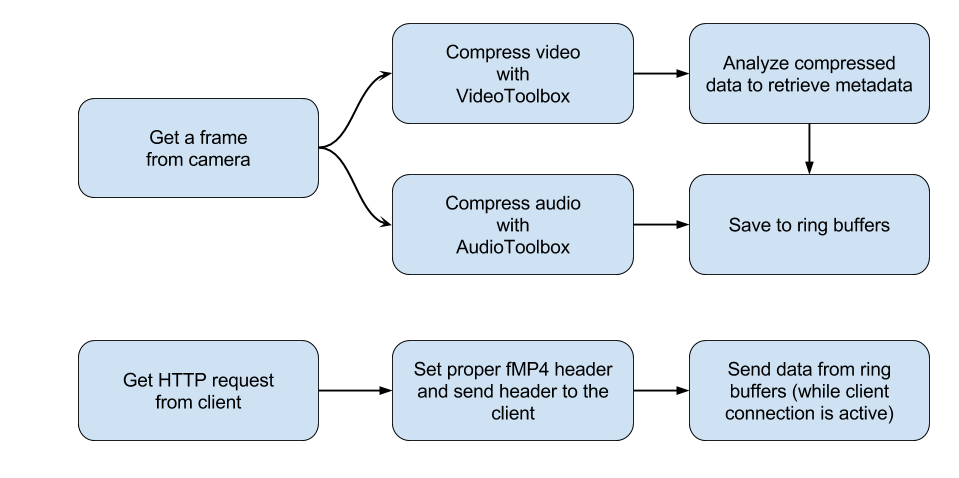

This is a demo app that takes frames from the camera and sends them in the fMP4 format to the connected viewers. To connect to the device, viewers request a virtual “MP4” file that is generated automatically at the following address: http://<ip-address>:7000/index.mp4.

For this, the app launches a lightweight GCDWebServer, which listens on port 7000 and serves requests to download index.mp4.

Here you may encounter several issues.

- First of all, video cameras output “raw” frames that cannot be sent to the player as they are: you have to compress and format them properly first. Fortunately, starting from iOS 8.0, Apple opened programmatic access to hardware video compression capable of generating H.264 chunks on the fly. For this purpose, the VTCompressionSessionCreate and VTCompressionSessionEncodeFrame families of functions from the VideoToolbox framework are used.

- Audio is compressed in a similar way, by the AudioConverterNewSpecific / AudioConverterFillComplexBuffer functions of AudioToolbox. This results in data chunks in the AAC format.

- Second, the camera outputs frames at a comparatively high rate. So that we do not lose them, we keep the frames in CBCircularData ring buffers and send them to compression as the buffers fill up. The same kind of ring buffer is used to generate the chunked response, so the app never keeps more than a preset number of frames in memory (otherwise an infinite broadcast would require infinite memory).

- Third, for fMP4 to work properly you have to set the initial stream data (including SPS/PPS) correctly in the MP4 file’s moov block. To do this, the app seeks through the H.264 blocks generated by the hardware encoder, finds the next key frame and fetches the SPS/PPS values. Then, when generating the moov header, it uses them so that the player can initialize decoding and start the stream from that key frame. Hence, from the player’s perspective the file is always shown “from the very beginning”.

- There is another problem: MP4 has its own data requirements and can only include properly formatted H.264 blocks. We solved this by attaching a wonderful library called Bento4, which helps repack the H.264 blocks into properly formatted MP4 atoms on the fly.
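The last two items can be illustrated with a small sketch. It assumes the H.264 data arrives as an Annex B byte stream, where NAL units are separated by start codes; this is an assumption for illustration and may not match what the hardware encoder or DemoFMP4 actually hand over, and it is not how Bento4 is used. The helper names are hypothetical. The NAL unit types (5 for a key frame slice, 7 for SPS, 8 for PPS) and the length-prefixed layout used inside MP4 samples are standard.

```python
import re
import struct

# A start code is two or more zero bytes followed by 0x01.
START_CODE = re.compile(rb"\x00{2,}\x01")


def split_annex_b(stream: bytes) -> list:
    """Split an Annex B byte stream into NAL units without start codes."""
    return [nal for nal in START_CODE.split(stream)[1:] if nal]


def nal_type(nal: bytes) -> int:
    """The NAL unit type is stored in the low 5 bits of the first byte."""
    return nal[0] & 0x1F


def find_parameter_sets(nals: list):
    """Return the first SPS (type 7) and PPS (type 8), or None if absent."""
    sps = next((n for n in nals if nal_type(n) == 7), None)
    pps = next((n for n in nals if nal_type(n) == 8), None)
    return sps, pps


def to_length_prefixed(nals: list) -> bytes:
    """Repack NAL units for MP4: each one preceded by its 4-byte length."""
    return b"".join(struct.pack(">I", len(n)) + n for n in nals)


# Tiny made-up stream: SPS, PPS and one key frame slice (payloads are fake).
sps, pps, idr = b"\x67\x42\xc0\x1e", b"\x68\xce\x3c\x80", b"\x65\x88\x84"
annex_b = b"\x00\x00\x00\x01" + sps + b"\x00\x00\x00\x01" + pps + b"\x00\x00\x01" + idr

nals = split_annex_b(annex_b)
found_sps, found_pps = find_parameter_sets(nals)
# SPS/PPS go into the moov header; the remaining NAL units become sample data.
sample = to_length_prefixed([n for n in nals if nal_type(n) not in (7, 8)])
```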

So we have made an app capable of delivering a stream from the device camera in near real-time by sending an “infinite MP4 file” to any standard HTTP client. This approach results in a fairly small latency of 1-2 seconds. Given the broad support for fMP4 and the ease of organizing such a broadcast (no dedicated server is needed), on-device generation of fMP4 is a simple and reliable solution.
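Two mechanics keep such an “infinite” response practical: memory stays bounded, and data is sent without a known total length. The sketch below illustrates both in a generic way; it is not the CBCircularData or GCDWebServer implementation, and the class and function names are hypothetical. The chunk framing follows HTTP/1.1 chunked transfer encoding.

```python
from collections import deque


class FrameRing:
    """Fixed-capacity buffer: when full, the oldest frame is overwritten."""

    def __init__(self, capacity: int):
        self._frames = deque(maxlen=capacity)
        self.dropped = 0

    def push(self, frame: bytes) -> None:
        if len(self._frames) == self._frames.maxlen:
            self.dropped += 1  # the append below discards the oldest frame
        self._frames.append(frame)

    def pop(self):
        """Return the oldest buffered frame, or None if the buffer is empty."""
        return self._frames.popleft() if self._frames else None


def http_chunk(data: bytes) -> bytes:
    """Frame data as one chunk: hex length, CRLF, data, CRLF."""
    # Note: an empty chunk would terminate the response, so callers of an
    # endless stream should never pass empty data.
    return f"{len(data):X}".encode("ascii") + b"\r\n" + data + b"\r\n"


ring = FrameRing(capacity=2)
for frame in (b"a", b"b", b"c"):
    ring.push(frame)
```

Dropping the oldest data when a viewer cannot keep up is one possible policy; an app could equally choose to disconnect slow viewers.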

True Real-Time Live Streaming

But what if we need “true real-time”, like in Skype, for example? Unfortunately, fMP4 is not the best fit for this. Although there is no index file here (unlike in HLS) and the video stream chunks are small, there are still chunks within the MP4 file. Until such a chunk has been downloaded completely, the client cannot see its frames, so a slight latency remains.

RTSP Live Streaming on iOS

Hence, for true real-time, meaning that the player gets a frame almost immediately after the camera has generated it, another format designed specifically for streaming is better suited. We mean RTSP, which is well supported among players (for instance, the well-known VLC player plays it back easily) and is relatively easy to implement. To explain, let’s look at our sample app for RTSP-based streaming, DemoRTSP.

Unlike HLS and fMP4, which exchange data via HTTP, RTSP uses its own format on top of “bare sockets.” Also, RTSP uses two channels: a control channel to exchange control data between the client and server, and a delivery channel that streams only the compressed data from the server. This slightly complicates the exchange model, but minimizes latency, as the client receives data as soon as the server sends them over the network. There is simply no intermediary in RTSP!
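On the delivery channel, compressed frames usually travel as RTP packets: a 12-byte header followed by the payload. The sketch below shows that header layout as a hedged illustration, not DemoRTSP’s code. The function name is hypothetical, payload type 96 is an arbitrary dynamic type chosen here, and the 90 kHz timestamp clock is the conventional one for H.264 video. Splitting frames larger than a network packet is not shown.

```python
import struct


def rtp_packet(payload: bytes, sequence: int, timestamp: int, ssrc: int,
               payload_type: int = 96, marker: bool = False) -> bytes:
    """Prefix payload with a minimal 12-byte RTP header."""
    first = 2 << 6  # version 2, no padding, no extension, no CSRC entries
    # Marker bit (commonly set on the last packet of a frame) + payload type.
    second = (int(marker) << 7) | (payload_type & 0x7F)
    header = struct.pack("!BBHII", first, second,
                         sequence & 0xFFFF,        # wraps around at 16 bits
                         timestamp & 0xFFFFFFFF,   # in 90 kHz ticks for video
                         ssrc & 0xFFFFFFFF)        # identifies the sender
    return header + payload


# One second into the stream at 90 kHz, second packet sent.
packet = rtp_packet(b"\x65\x88\x84", sequence=1, timestamp=90000,
                    ssrc=0x1234, marker=True)
```

Each packet is self-contained, so the server can send it the moment a frame leaves the encoder.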

DemoRTSP is a minimal implementation of such an exchange. At launch, the app starts listening on the service port (554) for connections from client players. On connection, DemoRTSP sends a response containing a simple line indicating the codecs used to compress video and audio (for iOS this is the standard H.264/AAC pair) and the data port used to deliver compressed frames from the server. Then the client connects to the “data port” and plays back everything it receives from the server. In this model, the server never waits for anything and all compressed frames are sent to the player immediately, ensuring minimum latency.
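As an illustration of the control-channel side, the sketch below builds a reply to an RTSP DESCRIBE request with an SDP body announcing an H.264 video track. It is a simplified sketch under our own assumptions, not DemoRTSP’s actual exchange: the function names, session name and track name are hypothetical, audio is omitted, and in standard RTSP the data ports are negotiated in a later SETUP request, which is not shown.

```python
import base64


def build_sdp(sps: bytes, pps: bytes, server_ip: str = "0.0.0.0") -> str:
    """Describe one H.264 video track; SPS/PPS are passed base64-encoded."""
    params = ",".join(base64.b64encode(p).decode("ascii") for p in (sps, pps))
    lines = [
        "v=0",
        f"o=- 0 0 IN IP4 {server_ip}",
        "s=Live camera",                  # hypothetical session name
        f"c=IN IP4 {server_ip}",
        "t=0 0",                          # unbounded (live) session
        "m=video 0 RTP/AVP 96",           # dynamic payload type 96
        "a=rtpmap:96 H264/90000",         # codec name and clock rate
        f"a=fmtp:96 packetization-mode=1;sprop-parameter-sets={params}",
        "a=control:track1",               # hypothetical track name
    ]
    return "\r\n".join(lines) + "\r\n"


def describe_response(cseq: int, sdp: str) -> bytes:
    """Wrap the SDP in an RTSP 200 OK reply echoing the request's CSeq."""
    body = sdp.encode("ascii")
    head = (
        "RTSP/1.0 200 OK\r\n"
        f"CSeq: {cseq}\r\n"
        "Content-Type: application/sdp\r\n"
        f"Content-Length: {len(body)}\r\n"
        "\r\n"
    )
    return head.encode("ascii") + body


sdp = build_sdp(b"\x67\x42\xc0\x1e", b"\x68\xce\x3c\x80")
response = describe_response(2, sdp)
```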

It must be said that on modern iOS devices compression and networking are not that resource-consuming. Therefore, apart from simple streaming, additional stream processing before transmission is possible. For example, it is easy to overlay text, apply video effects, or otherwise change the video automatically as the app needs. For this purpose, you can use either post-processing of the camera buffer or a more efficient OpenGL-based approach. Let us discuss this in more detail.

Live Video Effects on iOS

In our DemoRTSP app we used a simple approach based on PBVision, but in your apps you can use more advanced solutions based on GPUImage, which lets you apply a chain of OpenGL effects to the video stream while adding almost no latency.

In DemoRTSP you can find an example of a blur overlay on the stream.
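To show what post-processing of a camera buffer means in principle, here is a deliberately naive box blur over a grayscale plane. It is only a conceptual sketch with a hypothetical function name, not the PBVision or GPUImage implementation; a real app would run such a filter on the GPU rather than pixel by pixel on the CPU.

```python
def box_blur(plane: list, radius: int = 1) -> list:
    """Blur a grayscale image given as a list of rows of 0-255 integers."""
    height, width = len(plane), len(plane[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = count = 0
            # Average all pixels in the window, clipped at the image borders.
            for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += plane[ny][nx]
                    count += 1
            out[y][x] = total // count
    return out


# A single bright pixel gets spread over its neighbours.
frame = [[0, 0, 0],
         [0, 90, 0],
         [0, 0, 0]]
blurred = box_blur(frame)
```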

Who said Prisma-like video generation on the fly is impossible?!

You can go even further and use the “quickest” way of image processing currently available in iOS: Metal, which came as a replacement for OpenGL in recent iOS versions. For instance, in the MetalVideoCapture sample you can see how to use the CVMetalTextureCache API to pass the camera capture to a Metal render pass.

Besides Metal, the latest iOS versions came with a few other interesting features. One of them is ReplayKit, with which you can stream the device’s screen with just a couple of lines of code. The framework is pretty fascinating, but at the moment it does not let you “meddle” with frame creation, cannot capture from the camera (only from the screen!) and is limited to its built-in capturing features. Currently, it is not intended for full-scale controlled streaming and more resembles something “reserved for future use.” The same applies to another popular new development, support for the Swift programming language in iOS. Unfortunately, it does not integrate well with the low-level features needed for video and data handling, so it is unlikely to be applicable to such tasks anytime soon, except for the interface.