
DENIVIP Media has been building video platforms since 2008 (usually together with services, portals and applications). Over 4 years of developing custom video platforms for different projects, we have accumulated solid expertise and learned about many pitfalls that are almost inevitable in video projects. In this post, I would like to describe how we design video platforms and which factors have to be taken into account when creating a new video platform or upgrading an existing one. I would also like to note the major trends in video platform development that are foreseeable in the near future.

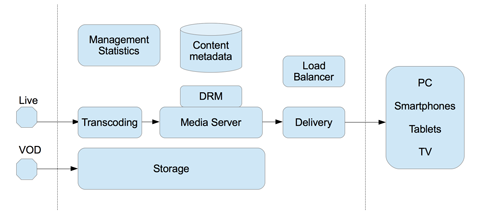

In our understanding, a video platform consists of the following key modules:

- Video Balancer is a service that tells the user where to fetch a particular video

- Video Delivery Server is a caching edge server that serves the video

- Media Server is a server that prepares video for delivery

- Transcoder is a service that converts video to the proper format with the required encoding parameters

- Storage is the system that stores data

- DRM is the Digital Rights Management system

- Video Player, which may target Flash, iOS, Android or TV

- Management system for the content and its metadata

Video Balancing

When building large and complex video services, it is critical to pay sufficient attention to the quality of video delivery and to the optimal use of the infrastructure. In almost all of our projects, we use our own video request balancer, which receives a link to a video stream and responds with the address of the video server that can deliver that video to the user in the best possible way. We wrote this module in C++ to get maximum performance.

In most cases, the balancer is the most important part of the video platform. The main arguments for its use in our projects were the following:

- Round Robin distribution is not effective: for VOD it is important to send the user to the server that already has the content in its cache

- In case of Live/DVR it is very easy to link certain TV channels to individual server groups

- Connection and disconnection of servers and server groups for maintenance purposes

- Real time load distribution

- Automatic withdrawal of a server from rotation when its load limit is exceeded

- Offloading peak traffic to CDNs, flexible traffic management across different CDNs, balancing between CDNs

- Graphical user interface with real-time statistics

At this point, I can hardly imagine how even a project with just two video servers could be implemented without a balancer. 🙂 For instance, in the initial phase the load balancer can help you serve users from a local server or a test environment, and then gradually move the traffic to the Amazon cloud or a third-party CDN.
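To illustrate the cache-affinity and load-limit arguments above, here is a minimal sketch in Python. It is not our C++ balancer: it assumes rendezvous (highest-random-weight) hashing as one possible way to keep a video on the same server, and the server names, the `load` dictionary and the `max_load` threshold are all hypothetical.

```python
import hashlib


def _score(server, video_id):
    """Deterministic pseudo-random score for a (server, video) pair."""
    digest = hashlib.sha256(f"{server}|{video_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def pick_server(video_id, servers, load, max_load=0.9):
    """Return the preferred server for video_id, skipping overloaded ones.

    servers: names of the servers currently in rotation (hypothetical)
    load:    dict of server -> current load as a fraction of capacity
    """
    healthy = [s for s in servers if load.get(s, 0.0) < max_load]
    if not healthy:
        return None  # the caller could fall back to a CDN here
    # The same video maps to the same healthy server, so its cache stays warm.
    return max(healthy, key=lambda s: _score(s, video_id))
```

Unlike Round Robin, repeated requests for the same video land on the same server as long as it stays under its load limit, and taking one server out of rotation only moves the videos that were assigned to it.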

Video Delivery Server

Over the past two years, we have not had a single project with a video delivery protocol other than HTTP. All projects use Adobe HDS and Apple HLS to deliver video content. In this model, the video server receives a request for the next video fragment and checks whether it is available in the cache. If it is not, the server requests the fragment from the media server and stores it in the cache (if necessary). It then sends the fragment to the user.
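The request flow described above can be sketched as a small LRU cache. This is only an illustration of the logic, not how Nginx or Varnish implement it; the `fetch_from_origin` callable and the `capacity` parameter are assumptions made for the example.

```python
from collections import OrderedDict


class FragmentCache:
    """Tiny LRU cache for video fragments, keyed by the fragment path."""

    def __init__(self, fetch_from_origin, capacity=1000):
        # fetch_from_origin: hypothetical callable, path -> bytes,
        # standing in for a request to the media server.
        self.fetch_from_origin = fetch_from_origin
        self.capacity = capacity
        self.items = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, path):
        if path in self.items:
            self.hits += 1
            self.items.move_to_end(path)  # mark as most recently used
            return self.items[path]
        self.misses += 1
        data = self.fetch_from_origin(path)  # cache miss: ask the media server
        self.items[path] = data
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used one
        return data
```

The `hits` and `misses` counters give the caching efficiency, which matters for the media server load discussed later in this post.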

What really helps here is that this task can be handled by any well-optimized Web server (we use Nginx) or cache server (e.g., Varnish). Such a server can be deployed very quickly, and with Amazon you can create a traffic delivery point in almost any region.

Here are the main constraints to video delivery server performance:

- Network Interface Performance

- Amount of RAM (how much cached content can be kept in memory)

- Hard disk performance (IOPS)

- Hard disk capacity

Given these constraints, the number of delivery servers can be estimated from the total bandwidth to be served (average video bitrate * number of users in peak hours) and from the total amount of content to be kept in the cache (in the worst case, this is equal to the total storage capacity). Hard disk performance limits the number of unique content units that a server can deliver in parallel without issues and additional delays.
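As a worked example of the bandwidth part of this estimate, here is a minimal sketch. The figures (20,000 peak viewers, 1.5 Mbit/s average bitrate, 10 Gbit/s network interfaces) and the `usable_fraction` headroom are made-up assumptions, and the calculation ignores RAM and disk limits.

```python
import math


def delivery_servers_needed(peak_viewers, avg_bitrate_mbps, nic_gbps,
                            usable_fraction=0.8):
    """Estimate the server count from peak bandwidth alone (network-bound case)."""
    peak_gbps = peak_viewers * avg_bitrate_mbps / 1000.0
    per_server_gbps = nic_gbps * usable_fraction  # leave some headroom
    return peak_gbps, math.ceil(peak_gbps / per_server_gbps)


peak_gbps, servers = delivery_servers_needed(
    peak_viewers=20_000, avg_bitrate_mbps=1.5, nic_gbps=10)
print(f"{peak_gbps:.1f} Gbit/s at peak -> {servers} servers")
# prints "30.0 Gbit/s at peak -> 4 servers"
```

In practice the result should be treated as a lower bound, since disk IOPS or cache size may become the limit before the network does.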

Media Server

At this point, there are two good media servers on the market: Adobe Media Server 5 and Wowza Media Server 3.5. Both are great products. The first is high-end and expensive, and the second is cheaper. In all our major projects we used AMS5 to ensure stability and reliability. In smaller projects, where cost savings are important, Wowza also showed good results. Its video conversion features for live streaming are especially helpful. The choice of media server is often driven by DRM requirements: in our projects, it is usually Adobe Access 4.

In essence, the load on the media server is proportional to the number of video streams it receives and the number of video streams it delivers (the latter depends on the caching efficiency of the video delivery servers). For instance, an abrupt drop in caching efficiency can bring down the entire video platform, so you need to closely monitor how many requests arrive at the media server.
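The effect of caching efficiency on the media server can be shown with simple arithmetic. This is a simplified model, assuming every cache miss on a delivery server turns into exactly one request to the media server; the function names and numbers are illustrative.

```python
def origin_requests_per_second(edge_rps, cache_hit_ratio):
    """Requests that miss the edge cache and reach the media server."""
    return edge_rps * (1.0 - cache_hit_ratio)


def should_alert(edge_rps, cache_hit_ratio, origin_limit_rps):
    """True if the media server would receive more than it can handle."""
    return origin_requests_per_second(edge_rps, cache_hit_ratio) > origin_limit_rps
```

With 10,000 requests per second on the edge, a hit ratio of 95% leaves about 500 requests per second for the media server, while a drop to 80% quadruples that to about 2,000.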

DRM

Generally, DRM requirements are imposed by copyright holders and are a prerequisite for licensing content. In the projects where we were involved in building the platform, we used Adobe Access 4, Windows RMS or Marlin. Recently, interest in Widevine has grown (usually to deliver video to TV devices). For browser-based viewing, Adobe Access 4 is currently likely to be the best option (no additional plugins needed). On iOS and Android it no longer has this advantage, but if Adobe Access 4 is already used in the project, extending it to other devices is convenient. If TV output is needed, you can hardly do without Widevine / PlayReady / Verimatrix.

It is worth noting that DRM consists of two parts: the license (license servers) and encryption (usually on the media server level). An interesting ongoing trend is to move encryption to the transcoder level (including packaging). In our opinion, this is a promising trend as it can lead to a more efficient infrastructure.

Transcoders

To process a large inflow of new content, sufficient transcoding resources are required. In the case of VOD, the capacity of the transcoding farm determines how fast new content becomes available in the service. In the case of Live streaming, it determines the maximum number of parallel video broadcasts. Knowing the amount of content and its characteristics, as well as the number of different bitrates to be prepared, you can determine how many transcoders are needed.
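A rough version of this calculation might look like the sketch below. The `speed_factor` and `streams_per_transcoder` values are assumptions that have to be measured on real content for a particular transcoder; they are not vendor figures.

```python
import math


def vod_transcoders_needed(content_hours_per_day, renditions, speed_factor,
                           hours_available=24.0):
    """Transcoders needed to keep up with the daily inflow of VOD content.

    speed_factor: hours of one rendition that a single transcoder produces
                  per wall-clock hour (hypothetical, must be measured).
    """
    work_hours = content_hours_per_day * renditions / speed_factor
    return math.ceil(work_hours / hours_available)


def live_transcoders_needed(channels, renditions, streams_per_transcoder):
    """Transcoders needed to encode all live channels in parallel."""
    return math.ceil(channels * renditions / streams_per_transcoder)
```

For example, 100 hours of new content per day in 5 bitrates at twice real-time speed gives 250 transcoder-hours per day, or 11 transcoders; 30 live channels in 4 bitrates at 8 output streams per transcoder give 15.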

In most cases, a reliable estimate of transcoder capacity is possible only by testing on real content in the planned configuration. In our opinion, the GPU-based Elemental transcoders are very promising. In our projects, we often use Harmonic Rhozet transcoders and are also very happy with them.

Building a stable infrastructure on the open-source ffmpeg/avconv transcoders is also possible, but it is a non-trivial engineering task. The cost of implementing it may exceed the cost of commercial transcoders.

Storage

As far as Storage is concerned, it is all quite simple. You just need to define the capacity (how much content to store) and the performance (how many content requests from the media server to process). The total storage capacity equals the volume of the source content (if you need to store it) plus all resulting bitrates of the content units. In the case of Just In Time (on-the-fly) packaging this is sufficient. In the case of pre-packaged content, each group of devices needs a separate set of resulting bitrates (at least Adobe HDS for Flash/AIR and Apple HLS for iOS).
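The capacity rule above can be written down as a short calculation. The bitrates and library size in the example are made up, and the sketch ignores container overhead and replication.

```python
def storage_tb(content_hours, source_mbps, rendition_mbps,
               packaged_formats=1, keep_source=True):
    """Estimate the storage capacity in terabytes (decimal).

    packaged_formats: 1 for Just In Time packaging; with pre-packaging,
                      the number of formats stored (e.g. 2 for HDS + HLS).
    """
    seconds = content_hours * 3600
    total_mbps = sum(rendition_mbps) * packaged_formats
    if keep_source:
        total_mbps += source_mbps
    megabits = seconds * total_mbps
    return megabits / 8 / 1_000_000  # megabits -> megabytes -> terabytes
```

For 1,000 hours of content with a 20 Mbit/s source and bitrates of 0.5, 1, 2 and 4 Mbit/s, this gives about 12.4 TB with on-the-fly packaging and about 15.8 TB when both HDS and HLS copies are stored.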

If the storage is small, it is essential to monitor caching efficiency so that the media servers do not overload the storage. For instance, trouble can arise when the storage is small (physically small disk capacity) and the project is highly popular (a large number of viewers). In this case, if the caching efficiency is low, the media servers can quickly overload the storage with requests.

Video Players

Development of video players and applications is generally one of the most fascinating topics. This is where the effort we put into all the other parts of the video platform comes to fruition. Technological restrictions are usually dictated by how widely a given technology is adopted by end users (e.g., the Flash plugin) and by the DRM requirements. In our projects, for browser versions of our video services we create video players based on Adobe Flash (as it is widely adopted, and Adobe Access 4 is a very popular DRM system, at least in our region). We are also experimenting with HTML5 video players. It is an attractive technology, but it has not yet fully matured for commercial use.

To develop Android applications, we use Adobe AIR: this allows us to smooth over, to some extent, the diversity of Android OS versions and of device models from different vendors. In other words, we largely delegate to Adobe some of the problems of cross-platform video support. Sometimes, however, it has also been necessary to build native Android applications. Creating HTML5 Web applications for Android has not proved efficient, as cross-device layout for Android can be non-trivial, especially if it includes a Flash component (which is no longer supported by Adobe). Moreover, Web applications are far from ideal, as running in a browser and an optimal user experience do not go together very well.

For both Flash and AIR for Android, support for Adobe Access is implemented on top of the OSMF framework without any substantial effort.

As far as iOS applications are concerned, we use native tools to develop them. iOS users are very sensitive to the responsiveness and usability of the interface, to animation and to the level of interactivity of the applications. So the effort is long and costly, but the potential here is very high. If saving time is the priority, you can always compile an AIR app for iOS. When developing iOS applications, it is very important to check that the video stream parameters comply with the Apple requirements.

For TVs, you usually have to develop something unique each time, typically with JavaScript and HTML. TVs can often accept video sources in MP4 or Apple HLS formats.

Bottom line

In our opinion, the outlined approach corresponds to the main industry trends.

- Essentially, the balancer and caching servers are DASH ready.

- In AMS and Wowza, DASH support will be available shortly.

- Transition to H.265 is not complicated either (most of the effort goes into enabling hardware support on the device level).

- All modules can be deployed in the cloud; moreover, we have already made them available as separate services from the cloud.

In the next posts, we are going to discuss how to provide metadata to video players and how to create interactive interfaces, and describe the features of different DRM solutions and of an iOS DASH video player. Stay tuned! 😉

PS We will also be happy to hear which issues and pitfalls you have encountered in your projects, and which solutions you have chosen.