This post is also available in: Russian

Flash Media Server provides excellent functionality for building online video services, and live video is one of the most popular of them on the Web. Flash Media Live Encoder (FMLE) is often used as the video source. It is an excellent free encoder, although it has some limitations. In this post, we discuss how to set up live streaming with Flash Platform products and how to overcome FMLE's limit of three bitrates per multi-bitrate stream.

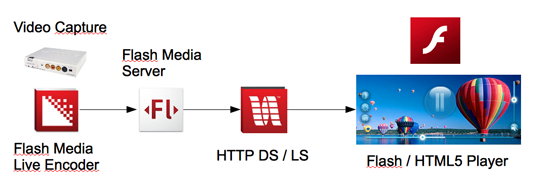

First of all, let us consider a typical live streaming flow chart. It consists of a video capture device, Flash Media Live Encoder, Flash Media Server and a Flash video player. Using Flash Media Server as the central node for processing and distributing video streams lets you build unique services in which business logic and multimedia content are closely coupled. Moreover, the new release of Flash Media Server will support streaming to iOS devices (via Apple HTTP Live Streaming).

Video Capture Device

As a video capture device, you can use an affordable PCI Express capture card (for instance, BlackMagic DeckLink SDI) or a Canopus ADVC 110 (connected via a FireWire adapter). When configuring such a system, it is critical to give the encoding server as much processing power as possible. In our experience, an eight-core server (two quad-core CPUs) is sufficient to encode three H.264 streams with a total bitrate of 7.5 Mbps. Industrial solutions most commonly use hardware encoders and transcoders (Digital Rapids, Harmonic, etc.). If you choose one, pay attention to small but very useful features: Absolute Time Code (ATC), MultiPoint Publishing, backup, and stream recovery on disconnect.

Flash Media Live Encoder

Flash Media Live Encoder is a free H.264-based live stream encoder. It connects to a video capture device and generates one, two or three RTMP streams (for dynamic bitrate streaming) to enable smooth bitrate switching. Three bitrates are not enough to deliver to the range of devices that modern projects target: cell phones, smartphones, tablets, TVs and STBs all have different screens and network connections. Ideally, about 10 bitrates are needed to cover all device types. To overcome this limitation, you can use ffmpeg to transcode a single stream in real time into as many bitrates as required. Below we discuss in detail how to do this.

Flash Media Server

A benefit of Flash Media Server is that it requires minimal effort from the system administrator. It has an easy-to-use installer, and in most cases no configuration files need to be edited after installation. That is not our case, though: our task is to get the most out of the server, and here too Flash Media Server excels. If you need to save money on a media server, you can use the Amazon version of Flash Media Server.

Flash Media Server supports live streaming over RTMP / RTMPE (Flash), HTTP Dynamic Streaming (Flash), and HTTP Live Streaming (iOS). HTTP-based protocols are more stable at low bandwidth and allow content to be cached while playback is paused. The advantage of RTMP is its built-in interactivity in Flash Player: an RTMP connection can carry metadata, objects and remote procedure calls.

The main purpose of Flash Media Server is to deliver content to users in multiple formats with high performance, and to run additional services such as content protection (Flash Access DRM), DVR (pausing and rewinding a live stream), etc.

Video Player

Depending on the platform, any video player can be used: HTML5, Flash, Android, iOS… We try to build video players for every available technology, although in most cases compiling the Flash player for a specific platform is sufficient. Also keep in mind that the player's control functions have to be adapted for each platform.

Online transcoding of RTMP streams using FFMPEG

We now turn to the practical part: building an unlimited multi-bitrate live streaming solution with free tools. We assume everything is built from source, although packages from specialized repositories can be installed instead.

Building ffmpeg with H.264 support. Transcoding a live stream from FMS and republishing multiple streams.

1. To build ffmpeg, you’ll need the following packages:

```shell
yum install git make gcc gcc-c++ pkgconfig yasm
```
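Before building, it can help to confirm that the tools are actually on PATH. The following is a minimal sketch that is not part of the original procedure; the function name `missing_tools` is hypothetical, and the command names are assumptions about what the packages above provide (`g++` from gcc-c++, `pkg-config` from pkgconfig).

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: print the tools from the argument list that
# are not on PATH, and return non-zero if any are missing.
missing_tools() {
  local tool
  local missing=()
  for tool in "$@"; do
    # command -v succeeds if the name resolves to a command
    # (executable, builtin, or function).
    command -v "$tool" >/dev/null 2>&1 || missing+=("$tool")
  done
  echo "${missing[*]}"
  [ "${#missing[@]}" -eq 0 ]
}
```

For example, `missing_tools git make gcc g++ pkg-config yasm` prints the names of any tools still missing and returns a non-zero status if there are any.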

2. Building librtmp

```shell
git clone git://git.ffmpeg.org/rtmpdump
cd rtmpdump/librtmp
make
make install
```

3. Building libfaac & libmp3lame

a) libfaac

Go to http://www.audiocoding.com/downloads.html and download faac-1.28.tar.gz

```shell
tar zxvf faac-1.28.tar.gz
cd faac-1.28
./configure
make
make install
```

b) libmp3lame

Go to http://lame.sourceforge.net/download.php and download lame-3.98.4.tar.gz

```shell
tar zxvf lame-3.98.4.tar.gz
cd lame-3.98.4
./configure
make
make install
```
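Steps 3a and 3b follow the same unpack, configure, make, install pattern, so they can be wrapped in one helper. This is a hypothetical sketch, assuming each archive unpacks into a directory named after the archive without `.tar.gz` (true for the two versions above); with `DRY_RUN=1` it only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Hypothetical helper for the tarball-based libraries (steps 3a and 3b).
# Assumes NAME.tar.gz unpacks into ./NAME. With DRY_RUN=1 it only prints
# the commands. The body is a subshell, so "cd" does not leak to the caller.
build_tarball() (
  archive="$1"
  dir="${archive%.tar.gz}"   # faac-1.28.tar.gz -> faac-1.28
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "tar zxvf $archive" "cd $dir" "./configure" "make" "make install"
    exit 0
  fi
  # Stop at the first failing step and return its status.
  tar zxvf "$archive" &&
    cd "$dir" &&
    ./configure &&
    make &&
    make install
)
```

For example, run `build_tarball faac-1.28.tar.gz && build_tarball lame-3.98.4.tar.gz` as root from the download directory.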

4. Building libx264

```shell
git clone git://git.videolan.org/x264
cd x264
./configure --enable-shared
make
make install
```
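Because x264 is built with `--enable-shared` and the libraries above install under `/usr/local/lib` by default, ffmpeg may later fail to start with an "error while loading shared libraries" message on systems where that directory is not in the dynamic linker's search path, which is common on RHEL/CentOS. One way to handle this, sketched below under that assumption, is to list the directory in a file under `/etc/ld.so.conf.d/` and rerun `ldconfig`. The helper `register_libdir` and the file name `usr-local.conf` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure a library directory is listed in a
# dynamic-linker config file, adding it only once. Run ldconfig afterwards
# (as root) to rebuild the linker cache.
register_libdir() {
  local libdir="$1" conf="$2"
  # grep -qxF: quiet, whole-line, fixed-string match.
  if [ -f "$conf" ] && grep -qxF "$libdir" "$conf"; then
    return 0   # already registered
  fi
  echo "$libdir" >> "$conf"
}
```

For example, as root: `register_libdir /usr/local/lib /etc/ld.so.conf.d/usr-local.conf && ldconfig`. Running it twice leaves a single entry.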

5. Building ffmpeg with H.264

```shell
git clone git://git.videolan.org/ffmpeg.git
cd ffmpeg
# librtmp.pc is generated in the rtmpdump tree from step 2 (cloned next to ffmpeg)
export PKG_CONFIG_PATH=../rtmpdump/librtmp/
./configure --enable-debug=3 --enable-librtmp --enable-libmp3lame --enable-libfaac --enable-nonfree \
  --enable-version3 --enable-gpl --enable-libx264
make -j4
make install
```
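After `make install`, you can check that the external libraries were actually compiled in: `ffmpeg -version` prints the configure flags on a line starting with `configuration:`. The sketch below is a hypothetical `missing_flags` helper that reads that output and lists any required flag that does not appear.

```shell
#!/usr/bin/env bash
# Hypothetical check: read "ffmpeg -version" output on stdin and print every
# required configure flag (given as arguments) that is absent from the
# "configuration:" line. Returns non-zero if any flag is missing.
missing_flags() {
  local config flag
  local missing=()
  config=$(grep '^configuration:')
  for flag in "$@"; do
    # Pad with spaces so a flag only matches as a whole word.
    case " $config " in
      *" $flag "*) ;;
      *) missing+=("$flag") ;;
    esac
  done
  echo "${missing[*]}"
  [ "${#missing[@]}" -eq 0 ]
}
```

For example, `ffmpeg -version | missing_flags --enable-librtmp --enable-libx264 --enable-libfaac --enable-libmp3lame`. Empty output and a zero exit status mean all four libraries were enabled.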

To verify your build, you can use Flash Media Live Encoder (FMLE) to publish a stream to the basic live application of Flash Media Server (FMS). Alternatively, you can write a server-side ActionScript (SSAS) application that publishes a live stream from a local file.

Next, run ffmpeg to take the maximum-bitrate video stream and generate multiple lower-bitrate streams from it in real time. If ffmpeg and FMS run on the same server, use the following command:

```shell
# -re is omitted: it is an input option for simulating a live source from a
# file, and the RTMP input here is already live.
ffmpeg -threads 15 -i "rtmp://localhost/live_channel/maxlive live=1" \
  -acodec libfaac -ar 22050 -vcodec libx264 -s svga -b 500k -f flv "rtmp://localhost/live/test_500" \
  -acodec libfaac -ar 22050 -vcodec libx264 -s vga -b 300k -f flv "rtmp://localhost/live/test_300" \
  -acodec libfaac -ar 22050 -vcodec libx264 -s qvga -b 150k -f flv "rtmp://localhost/live/test_150" \
  -acodec libfaac -ar 22050 -vcodec libx264 -s qqvga -b 50k -f flv "rtmp://localhost/live/test_50"
```

Here the source is the live stream maxlive from the live_channel application running on the local FMS.

The four transcoded streams, each with a different bitrate and frame size, are republished to the live application on the same local FMS.
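The command above hard-codes four renditions. To move toward the roughly ten bitrates mentioned earlier, it can be convenient to generate the command from a bitrate ladder. The sketch below is one way to do this, not the original setup: the `LADDER` array, the `transcode` function and the `DRY_RUN` switch are hypothetical, the per-output options mirror the command above (ffmpeg builds of that period; current releases use `-b:v` and no longer include libfaac), and `-re` is left out because the input is already a live stream.

```shell
#!/usr/bin/env bash
# Hypothetical ladder-driven version of the transcoding command.
# Each LADDER entry is "stream_name:frame_size:video_bitrate".
SOURCE="rtmp://localhost/live_channel/maxlive live=1"
TARGET="rtmp://localhost/live"
LADDER=(
  "test_500:svga:500k"
  "test_300:vga:300k"
  "test_150:qvga:150k"
  "test_50:qqvga:50k"
)

transcode() {
  local entry name size rate
  local args=(-threads 15 -i "$SOURCE")
  for entry in "${LADDER[@]}"; do
    # Split the entry on ":" into its three fields.
    IFS=: read -r name size rate <<< "$entry"
    # Same per-output options as the hand-written command.
    args+=(-acodec libfaac -ar 22050 -vcodec libx264 -s "$size" -b "$rate" -f flv "$TARGET/$name")
  done
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "ffmpeg ${args[*]}"   # preview only; quoting is not preserved
  else
    ffmpeg "${args[@]}"
  fi
}
```

`DRY_RUN=1 transcode` prints the command for review, and `transcode` runs it. Adding a rendition means adding one entry, for example a hypothetical `"test_1000:hd480:1000k"`.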

To test these streams, you can create the following f4m file:

```xml
<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns="http://ns.adobe.com/f4m/1.0">
  <id>Dynamic Streaming</id>
  <streamType>live</streamType>
  <media url="rtmp://FMS_IP/live/test_50" bitrate="50" width="160" height="120" />
  <media url="rtmp://FMS_IP/live/test_150" bitrate="150" width="320" height="240" />
  <media url="rtmp://FMS_IP/live/test_300" bitrate="300" width="640" height="480" />
  <media url="rtmp://FMS_IP/live/test_500" bitrate="500" width="800" height="600" />
</manifest>
```
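Keeping the manifest in sync with the renditions by hand gets tedious as more bitrates are added, so the manifest can be generated as well. A minimal sketch with a hypothetical `make_manifest` function: each argument is `name:width:height:kbps`, `FMS_IP` stays a placeholder for your server address, and `APP` assumes the renditions are published to the `live` application, as in the ffmpeg command above.

```shell
#!/usr/bin/env bash
# Hypothetical F4M generator. FMS_IP is a placeholder for the server address;
# APP is the FMS application the renditions are published to.
FMS_IP="FMS_IP"
APP="live"

make_manifest() {
  local entry name width height kbps
  echo '<?xml version="1.0" encoding="utf-8"?>'
  echo '<manifest xmlns="http://ns.adobe.com/f4m/1.0">'
  echo '  <id>Dynamic Streaming</id>'
  echo '  <streamType>live</streamType>'
  for entry in "$@"; do
    # Each argument is "name:width:height:kbps".
    IFS=: read -r name width height kbps <<< "$entry"
    echo "  <media url=\"rtmp://$FMS_IP/$APP/$name\" bitrate=\"$kbps\" width=\"$width\" height=\"$height\" />"
  done
  echo '</manifest>'
}
```

For example, `make_manifest test_50:160:120:50 test_150:320:240:150 test_300:640:480:300 test_500:800:600:500 > live.f4m` produces a manifest with the same four renditions.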

You can now play back this F4M file with an OSMF-based video player, for instance, StrobeMediaPlayback.

Here are a couple of useful links on the topic: