In our previous articles, we examined in detail how to cache HTTP Dynamic Streaming content with the NGINX web server. For large-scale projects, however, caching alone is often insufficient. In this article, we discuss how to build high-performance content delivery systems for HTTP Dynamic Streaming.

When building an HTTP Dynamic Streaming platform for video content delivery, the following issues may arise:

- If your portal runs several caching servers and users regularly access a wide variety of video files, the web server caches are continuously rotated and overall performance degrades. With a hardware load balancer based on Round Robin, it is almost impossible to distribute the load evenly across the servers, because users view content at different bit rates.

- Constant access by the origin servers (Apache + f4fhttp module) to the data storage may shorten its service life, especially with SSD drives.

- An important task in content streaming is choosing the optimal site for each user to download from. This is far from trivial if your servers are distributed across several sites that peer with different providers.

Tackling these issues requires intelligent balancing of user load across the HTTP Dynamic Streaming video servers. Drawing on our experience in building large-scale content delivery systems, our experts have created a high-performance video platform based on our C++ load balancer and the NGINX web server, which can serve as a very effective HTTP caching proxy.

The basic functionality of the system:

- Balancing of requests across the servers based on content availability in the cache (content aware load balancing)

- Balancing between distributed sites based on geo-location (e.g., with an external database of IP addresses) and based on routes

- Balancing based on utilization of links to video servers

- Traffic prioritization for Premium users and Premium content (balancing based on content type and user type)

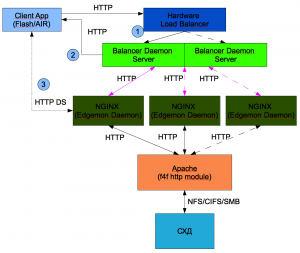

The system consists of two main components: Balancer Daemon (balancer) and Edge Daemon (video server).

Edge Daemon is a background application that tracks changes in the NGINX cache directory. It sends data on the video segments available in the server cache to Balancer Daemon via HTTP. To assess the server load, Edge Daemon also collects the following data and transmits it to the balancer:

- Average system load

- Free RAM

- Utilization of network interfaces and units

- Statistics from the NGINX HttpStubStatusModule
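As an illustration of the last two kinds of data, here is a minimal Python sketch of how a load report could be assembled. It is not the Edge Daemon's actual code: the names `parse_stub_status` and `build_report` and the report layout are assumptions made for this example. Only the plain-text layout of the stub status page and `os.getloadavg()` (available on Unix-like systems) are taken as given.

```python
import os
import re

# Example of the plain-text page served by the NGINX stub status module.
SAMPLE = (
    "Active connections: 291 \n"
    "server accepts handled requests\n"
    " 16630948 16630948 31070465 \n"
    "Reading: 6 Writing: 179 Waiting: 106 \n"
)


def parse_stub_status(text: str) -> dict:
    """Extract the counters from a stub status page."""
    active = re.search(r"Active connections:\s*(\d+)", text)
    counters = re.search(r"^\s*(\d+)\s+(\d+)\s+(\d+)\s*$", text, re.MULTILINE)
    states = re.search(
        r"Reading:\s*(\d+)\s+Writing:\s*(\d+)\s+Waiting:\s*(\d+)", text
    )
    if not (active and counters and states):
        raise ValueError("unrecognized stub status output")
    accepts, handled, requests = (int(v) for v in counters.groups())
    reading, writing, waiting = (int(v) for v in states.groups())
    return {
        "active": int(active.group(1)),
        "accepts": accepts,
        "handled": handled,
        "requests": requests,
        "reading": reading,
        "writing": writing,
        "waiting": waiting,
    }


def build_report(server: str, stub_status_text: str) -> dict:
    """Combine system load and NGINX counters into one report (hypothetical layout)."""
    load_1min, _load_5min, _load_15min = os.getloadavg()  # Unix-like systems only
    return {
        "server": server,
        "load_1min": load_1min,
        "nginx": parse_stub_status(stub_status_text),
    }
```

In a real deployment the status text would be fetched from whatever location the stub status module is exposed at, and the report would be sent to the balancer over HTTP.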

An Edge Daemon runs on each caching NGINX server. To keep configuration simple, the daemon is configured through an XML file.
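The cache tracking performed by Edge Daemon can be approximated by comparing periodic snapshots of the cache directory. The sketch below is only one possible approach, with hypothetical function names `snapshot` and `diff_snapshots`; the actual daemon may rely on file system notifications instead.

```python
import os


def snapshot(cache_dir: str) -> set:
    """Return the set of file paths under cache_dir, relative to cache_dir."""
    found = set()
    for root, _dirs, files in os.walk(cache_dir):
        for name in files:
            full_path = os.path.join(root, name)
            found.add(os.path.relpath(full_path, cache_dir))
    return found


def diff_snapshots(old: set, new: set) -> dict:
    """Report which cache files appeared or disappeared between two snapshots."""
    return {
        "added": sorted(new - old),
        "removed": sorted(old - new),
    }
```

Note that NGINX names cache files after the MD5 hash of the cache key, so mapping a file back to a video segment requires knowing how the cache key is built. That mapping is left out of this sketch.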

Balancer Daemon is an application that runs on a dedicated server. It collects and analyzes data on the load of the caching servers and, based on this analysis, chooses the optimal HTTP DS content server for each client. If the balancer fails to obtain statistics from the Edge Daemon on a server, it temporarily stops sending requests to that server. This provides a simple and flexible way to withdraw caching servers from the rotation (for example, for routine maintenance) without degrading the overall functionality of the system.
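The withdrawal rule described above can be sketched as a registry that remembers when each server last reported and treats silent servers as out of rotation. The class name `EdgeRegistry` and the 15-second timeout are assumptions for this example, not values taken from the actual balancer.

```python
import time


class EdgeRegistry:
    """Tracks which caching servers have reported statistics recently."""

    def __init__(self, timeout_s: float = 15.0, clock=time.monotonic):
        self._timeout_s = timeout_s  # assumed value, tune per deployment
        self._clock = clock          # injectable so the logic can be tested
        self._last_seen = {}

    def record_report(self, server: str) -> None:
        """Call whenever statistics arrive from a server's Edge Daemon."""
        self._last_seen[server] = self._clock()

    def active_servers(self) -> list:
        """Servers whose last report is no older than the timeout."""
        now = self._clock()
        return sorted(
            server
            for server, seen in self._last_seen.items()
            if now - seen <= self._timeout_s
        )
```

With this design, taking a server out for maintenance only requires stopping its Edge Daemon. The server rejoins the rotation as soon as reports resume.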

To improve the fault tolerance of the system, we used multiple Balancer Daemon servers together with a hardware load balancer that performs health checks. If one of the balancers fails, it is simply withdrawn from the rotation at the network level.

For portal users, the balancing mechanism is completely transparent and does not delay video downloads.

Let’s now take a closer look at how the client application (Flash/AIR) interacts with the HTTP DS load balancing system:

1) The player sends a request to the virtual IP address of the hardware load balancer, which forwards it to one of the HTTP DS balancers.

2) The balancer determines which NGINX servers have the requested content in their cache, and analyzes the client IP address and the overall server load. Based on this data, it selects the optimal NGINX server to deliver the content to each client.

3) The balancer returns to the player the address of the server to download the content from.
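The selection in step 2 can be illustrated with a small Python sketch. It assumes a strict priority order (cache hit first, then the client's own site, then the lowest load), which is a simplification chosen for this example. The real balancer is written in C++ and may weigh these factors differently. The names `Edge`, `site_for_ip` and `choose_edge`, and the prefix table, are hypothetical.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Optional, Set

# Hypothetical mapping of client networks to sites (documentation address ranges).
SITE_PREFIXES = {
    "192.0.2.0/24": "site-a",
    "198.51.100.0/24": "site-b",
}


@dataclass
class Edge:
    address: str
    site: str
    load: float  # assumed scale: 0.0 (idle) to 1.0 (saturated)
    cached: Set[str] = field(default_factory=set)


def site_for_ip(client_ip: str) -> Optional[str]:
    """Map a client IP address to a site, or None if no prefix matches."""
    address = ipaddress.ip_address(client_ip)
    for prefix, site in SITE_PREFIXES.items():
        if address in ipaddress.ip_network(prefix):
            return site
    return None


def choose_edge(edges: List[Edge], segment: str, client_site: Optional[str]) -> Edge:
    """Pick a server: cache hit first, then same site, then lowest load."""
    if not edges:
        raise ValueError("no caching servers available")

    def rank(edge: Edge):
        # False sorts before True, so hits and local servers win.
        return (
            segment not in edge.cached,
            edge.site != client_site,
            edge.load,
            edge.address,  # deterministic tie-breaker
        )

    return min(edges, key=rank)
```

For example, a client in `site-a` requesting a segment cached only on a busy `site-a` server and on an idle `site-b` server would be sent to the `site-a` server under this ordering. A production balancer would likely cap this preference once a server approaches saturation.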

To manage the pool of caching NGINX servers, our experts have developed an AIR application that retrieves statistics on server performance and overall system utilization online. For system management purposes, servers can be quickly added, removed or withdrawn from the rotation.

Thus, using the HTTP DS Load Balancer can substantially increase the proportion of content delivered from the web server cache and improve the reliability and fault tolerance of the system as a whole, while enabling a higher quality of service for users.