
All video services are similar in two key respects. First, load can become a serious issue even with a relatively small number of concurrent viewers. Second, users may abandon the service if video delivery is faulty. Problems with video delivery are often related to high load and suboptimal use of resources. To address such challenges in our projects, we used a dedicated video load balancer module. In this post, we describe the balancer and explain how to build an efficient video platform through optimal load distribution.

The main driver behind developing our own load balancer was the lack of adequate tools for optimal load distribution on video platforms. We did not find a single tool that addressed this issue. Hence, in 2008 we began to experiment with load balancing on video platforms using our own C++-based balancer.

First of all, we decided that low-level load distribution algorithms (TCP Round Robin, DNS Load Balancing) would not help us, because selecting an optimal video server requires knowing the details of the requested content. So we made the balancer request a separate step in video playback initialization. Each time the video player initializes, it queries the balancer to determine which video server will serve the request. In some cases, balancing can be performed by the backend while preparing the video player HTML page (by inserting a link to the video server) or while outputting data for the video object (for instance, via JSON).

The main tasks of the algorithm of video server selection are as follows:

1. Ensure distribution of customer requests across multiple available servers (in general, there is a variety of different tools, both software and hardware/software, but they fail to support all features of video content delivery)

2. Ensure efficient use of platform infrastructure, i.e., use intelligent load distribution to increase the maximum number of users served by a platform (compared to plain "round-robin") without degrading the quality of video delivery

3. Automatically maintain the quality of service (disable faulty modules, gradually increase load on new modules)

The first and third points are fairly straightforward: there are established algorithms, protocols, system parameters, and efficiency estimates to rely on. Addressing the challenges of the second point required much more effort.

Popular software products such as Varnish or NGINX can distribute client requests based on simple rules, but they still do not provide efficient balancing between the cache server and the video platform.

In several large projects, we have studied the traditional aspects of video service load balancing. The load is non-uniform and cyclical (by day, week, month), because content popularity varies among users and is affected by many external factors. Strictly speaking, such issues are not exclusive to video content, but since most video is entertainment, they are much more pronounced.

The results of our tests suggest that the same amount of traffic can be distributed with much less effort (in terms of server load and platform operation cost). An obvious way to improve the efficiency of a video platform is to send users to video servers that already have the required content in cache (preferably in RAM).

At the moment, most of the traffic in our projects consists of content delivered via HTTP Dynamic Streaming and HTTP Live Streaming. HTTP Progressive Download is used occasionally, but only to support older connected TVs. So, in terms of caching, a unit of content is a sequence of files (chunks) 0.5 – 10 MB in size (depending on the duration and bit rate of the video fragment contained in each file).

In some projects, the situation was aggravated by sharp load peaks caused by TV advertising to a wide audience or by the start of a popular sporting event. Currently, our balancer runs in projects with the following maximum load parameters:

- 40 edge video servers

- 40 Gbps traffic at peak

- 80 terabytes of content

- Live and VOD content

Architecture

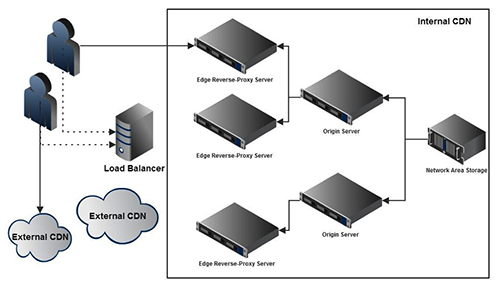

In a typical architecture, a video platform with a balancer includes its own common CDN and, possibly, some third-party CDNs. The balancing system consists of two elements:

1. The Video Load Balancer, a load distribution service that identifies the optimal video server based on the collected statistics

2. A service that runs on the video server (i.e., on the Edge Reverse-Proxy Server) and provides the necessary statistics to the balancing service

After launch, the balancer collects information from all edge servers about what content they have cached and how heavily their system resources (network, processor, hard disk) are used. Based on these regularly updated statistics, the balancer decides which content is best served from each server. Scalability is achieved by installing several balancers and distributing traffic between them using TCP Round Robin.
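As an illustration of the statistics described above, here is a minimal Python sketch of how per-edge reports might be stored. The class names, field names, and content IDs are hypothetical; the post does not describe the actual data structures of the balancer.

```python
from dataclasses import dataclass


@dataclass
class EdgeReport:
    """One statistics report from an edge server (field names are hypothetical)."""
    edge_id: str
    cached_content: set   # IDs of content units currently in cache
    net_load: float       # network interface utilization, 0.0..1.0
    cpu_load: float       # processor utilization, 0.0..1.0
    disk_load: float      # disk subsystem utilization, 0.0..1.0


class StatsRegistry:
    """Keeps only the most recent report for each edge server."""

    def __init__(self):
        self._latest = {}

    def update(self, report):
        # A newer report replaces the previous one for the same edge.
        self._latest[report.edge_id] = report

    def edges_with(self, content_id):
        # Names of edge servers that reported the content unit as cached.
        return sorted(
            r.edge_id for r in self._latest.values()
            if content_id in r.cached_content
        )


registry = StatsRegistry()
registry.update(EdgeReport("edge-1", {"movie-a", "movie-b"}, 0.40, 0.10, 0.20))
registry.update(EdgeReport("edge-2", {"movie-b"}, 0.70, 0.15, 0.50))
# A refreshed report from edge-1: "movie-a" has been evicted from its cache.
registry.update(EdgeReport("edge-1", {"movie-b"}, 0.45, 0.10, 0.25))
```

Keeping only the latest report per edge is the simplest possible policy; a production service would probably also discard reports that are too old.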

Load balancing algorithm

The load balancing system distributes users across the CDNs available to it. In terms of balancing, there are two types of CDNs:

- Internal – native service infrastructure (including its servers, Amazon EC2 cloud, etc.)

- External – third-party content delivery networks (Akamai, Limelight Networks, Level 3)

When balancing inside the native infrastructure, the balancer selects a specific video server (or its instance in the cloud) to serve the user’s request. In case the load balancer decides to forward the user to an external content delivery network, the routing is performed by the routing core of a given network.

The rules for selecting among the available CDNs are determined by a number of parameters and let you adjust the distribution of user requests to the requirements of a particular project. Given the cost of traffic passed through external CDNs, you can flexibly vary the priority of users depending on their status, geographical location, and the state of the requested content units, all of which may affect the overall quality of service and the operating costs of the project. For example, you may want to send only premium content (for instance, paid videos) or only premium users (such as subscribers) to the CDN. This way, you can ensure efficient use of costly CDN services. Another cost optimization is to forward to a CDN only the traffic that exceeds the capacity of the internal platform (short peaks).
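The rules mentioned in this paragraph can be sketched as a small decision function. This is only an illustration in Python: the `Request` fields, the 0.9 overflow threshold, and the order of the rules are assumptions, not the balancer's actual configuration.

```python
from dataclasses import dataclass


@dataclass
class Request:
    user_is_subscriber: bool   # premium user
    content_is_paid: bool      # premium content


def choose_network(req, internal_load, overflow_threshold=0.9):
    """Return "external" or "internal" for a request.

    internal_load is the fraction of internal platform capacity in use
    (0.0..1.0). The threshold value is an illustrative assumption.
    """
    # Rule 1: premium content or premium users go to the paid CDN.
    if req.content_is_paid or req.user_is_subscriber:
        return "external"
    # Rule 2: short peaks beyond internal capacity spill over to the CDN.
    if internal_load >= overflow_threshold:
        return "external"
    # Default: serve from the project's own infrastructure.
    return "internal"
```

For example, `choose_network(Request(False, False), 0.5)` keeps a regular viewer on the internal platform, while the same viewer is sent to the external CDN once `internal_load` reaches the threshold.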

For instance, a typical cost optimization problem for video delivery can be solved by combining the following three infrastructure approaches:

- Full-scale servers with a guaranteed leased line (they serve the average load and are busy 95% of the time)

- Dedicated servers (instances) to process peak traffic (at a certain stage, guaranteed bandwidth rental may become too costly, as you still need to pay the rental for off-peak hours)

- A commercial CDN network to support large-scale events (this may be needed to ensure more cost-efficient terms of traffic delivery; at Amazon the cheapest traffic in its price list is offered for $0.05)

Setting up such a distribution manually may take a long time, and you would need to update it constantly to match the current load profile. We solve this problem with a balancer that intelligently allocates users to these levels based on the current load on the project, which makes delivery substantially more cost-efficient.
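A simplified way to picture the allocation across the three levels is a priority fill: the cheapest level is filled first, and the overflow moves to the next one. The Python sketch below uses made-up capacities and ignores everything except viewer counts, so it only illustrates the idea rather than the balancer's real logic.

```python
def allocate_to_tiers(viewers, tiers):
    """Fill tiers in priority order; a capacity of None means "unbounded"."""
    allocation = {}
    remaining = viewers
    for name, capacity in tiers:
        take = remaining if capacity is None else min(remaining, capacity)
        allocation[name] = take
        remaining -= take
    return allocation


# Hypothetical capacities, in concurrent viewers.
TIERS = [
    ("leased", 10_000),  # full-scale servers on a guaranteed leased line
    ("cloud", 5_000),    # dedicated instances for peak traffic
    ("cdn", None),       # commercial CDN for large-scale events
]

average_day = allocate_to_tiers(8_000, TIERS)
big_event = allocate_to_tiers(22_000, TIERS)
```

With these numbers, an average day stays entirely on the leased servers, while a large event fills the first two levels and sends the remaining 7,000 viewers to the CDN.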

The main purpose of intelligent distribution of users across the servers of your own video platform is to improve efficiency, i.e., to maximize the number of users the platform can serve while improving the delivery of video content. Based on data from many projects, we identified load characteristics that allow users to be redistributed between servers in near real time, which maintains the quality of service and reduces the load on the platform as a whole.

The key parameters that may affect the balancer’s decision to select a specific content delivery server are as follows:

- The presence of content in the cache. If the content is in the cache, the Edge server creates no load on the network storage or the origin server. When we scale the platform by adding Edge servers (i.e., by increasing the maximum number of concurrent users), we are less likely to run into the performance limits of the network storage.

- Edge server workload. Here we take into account the load on the network interface, the processor, and the disk subsystem. The performance of the disk subsystem determines how many unique content units a given server can output simultaneously. The network capacity determines the maximum number of viewers that a given server can serve. The amount of RAM, in turn, determines how often the server has to access the hard drive to deliver content.

- The optimal route from the user to a specific Edge server. It is chosen based on a routing table that classifies CDN Edge servers into groups.

- The presence of the requested content unit in the list of currently viewed content. In this case, the probability that the content is already in the file cache of the operating system increases drastically; the same effect is achieved by concentrating the caching of popular content units on a smaller number of Edge servers. As a result, the load on the disk subsystem is reduced and the number of users served increases with no effect on the quality of service.
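One way to combine the parameters listed above is a weighted score per Edge server. The following Python sketch is a hypothetical illustration: the `Edge` fields and the weights are arbitrary placeholders, and the post does not disclose how the real balancer weighs these factors.

```python
from dataclasses import dataclass


@dataclass
class Edge:
    name: str
    group: str          # routing group from the routing table
    cached: set         # content units present in cache
    now_playing: set    # content units being watched right now
    net_load: float     # 0.0..1.0
    cpu_load: float     # 0.0..1.0
    disk_load: float    # 0.0..1.0


def score(edge, content_id, user_group):
    """Higher is better. All weights are arbitrary placeholders."""
    s = 0.0
    if content_id in edge.cached:
        s += 3.0        # no load on network storage or origin server
    if content_id in edge.now_playing:
        s += 2.0        # likely already in the OS file cache
    if edge.group == user_group:
        s += 1.0        # preferred route for this user
    # Penalize the busiest resource on the server.
    s -= 4.0 * max(edge.net_load, edge.cpu_load, edge.disk_load)
    return s


def pick_edge(edges, content_id, user_group):
    return max(edges, key=lambda e: score(e, content_id, user_group))


edges = [
    Edge("A", "eu", {"v1"}, {"v1"}, 0.5, 0.1, 0.3),
    Edge("B", "eu", set(), set(), 0.1, 0.1, 0.1),
    Edge("C", "us", {"v1"}, set(), 0.95, 0.2, 0.4),
]
```

In this example, a request for `v1` from the `eu` group goes to server `A`, which has the content cached and currently playing, while a request for the uncached `v2` goes to the lightly loaded server `B`.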

In practice, it may be necessary to adjust the video server selection based on information provided by the users. For instance, for a variety of reasons the player may consider the video server assigned by the balancer to be faulty (e.g., unavailable). In such a case, the player can query the balancer again, passing the video servers that fail to meet the QoS benchmark, and receive alternative servers in the response.

In almost every project, we needed a way to quickly withdraw users from a video server without any noticeable disruption of viewing. So, for ease of administration, we implemented forced withdrawal of Edge servers from rotation. In particular, at preset intervals the player can ask the balancer to verify the status of its Edge server and, if necessary, request another Edge server. This makes it possible to rapidly move clients away from servers that need maintenance.
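The re-query and forced withdrawal behavior can be pictured with a small Python sketch. The `Rotation` class and its methods are hypothetical names introduced for illustration; the real balancer API is not shown in this post.

```python
class Rotation:
    """Tracks which Edge servers may receive viewers (hypothetical helper)."""

    def __init__(self, edges):
        self._edges = list(edges)
        self._withdrawn = set()

    def withdraw(self, edge):
        # Operator action: take the Edge out of rotation for maintenance.
        self._withdrawn.add(edge)

    def is_active(self, edge):
        # What the player's periodic status check would ask.
        return edge in self._edges and edge not in self._withdrawn

    def select(self, exclude=()):
        # Skip withdrawn Edges and Edges the player reported as failing.
        blocked = self._withdrawn | set(exclude)
        for edge in self._edges:
            if edge not in blocked:
                return edge
        return None


rotation = Rotation(["edge-1", "edge-2", "edge-3"])
current = rotation.select()             # initial assignment
rotation.withdraw(current)              # maintenance begins on that Edge
if not rotation.is_active(current):     # player's periodic check
    current = rotation.select(exclude=[current])
```

Selection here simply takes the first allowed server; in a real deployment the choice among the remaining servers would follow the balancing parameters described earlier.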

Hardware platform

Specific hardware requirements depend on your intended operating conditions. For VOD delivery, we recommend servers with a fast disk subsystem (SSD is preferred) and a decent external channel (for example, a bond of four 1 Gbps network interfaces). By properly configuring the balancing system, you can achieve high caching performance: the ratio of outgoing traffic from Edges to outgoing traffic from NAS in various VOD projects may exceed 10:1. In such a case, cache rotation decreases and the number of write cycles is reduced, which is critical for SSD drives. The processor performance of the caching server is irrelevant. The amount of required RAM depends entirely on the intended load and on the need to increase performance through RAM caching.

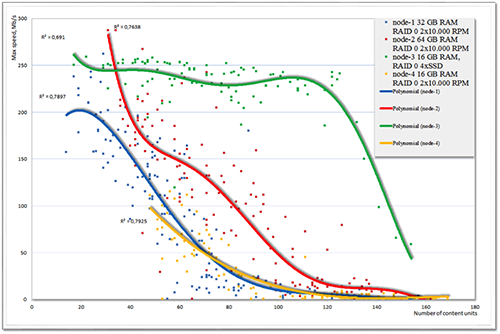

The figure shows the dependence between the output rate of VOD content fragments (in Mbps) and the number of content units viewed in parallel from one server. The servers differ in RAM capacity; in addition, node-3 (green line) uses a RAID 0 array of four SSDs as storage. The size of the file cache is identical on all servers. For convenience, the graph includes approximating curves that reflect the common trends.

As long as the set of simultaneously distributed content units can be handled efficiently by the OS file caching mechanisms, node-2 with 64 GB of RAM is more efficient. In this case, the maximum number of users that a given content delivery node can serve is limited only by the capacity of its network interfaces. As the number of simultaneously distributed content units grows, the probability of a miss in the RAM file cache grows, which the graph shows as a rapid decline in performance of all servers except the SSD-based ones. The latter hold the load steadily until the disk cache starts to be updated intensively, which happens when the number of concurrently distributed content units exceeds the number that fits in the cache. This is due to the fundamental gain in random read speed of SSDs over conventional hard drives.

Based on real tests, the balancer can handle more than 3,000 content requests per second (4 worker threads on an Intel(R) Xeon(R) CPU E5540 @ 2.53GHz). Under the same conditions, the balancer running on an Amazon EC2 Micro Instance can handle up to 800 requests per second. If the number of worker threads equals the number of cores, performance grows almost linearly. Scaling is achieved by adding extra balancers and distributing load between them using tools such as TCP Round Robin. As the number of Edge servers handled grows, the balancer's memory consumption grows linearly; however, response time varies only within the measurement error.
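For rough capacity planning, the throughput figures above can be turned into an estimate of how many balancers to place behind TCP Round Robin. The Python sketch below assumes that requests are spread evenly and that throughput adds up linearly across balancers; the `headroom` parameter is an assumption of this sketch, not a figure from the tests.

```python
import math

# Throughput figures quoted in the post (requests per second per balancer).
RPS_XEON_4_THREADS = 3000
RPS_EC2_MICRO = 800


def balancers_needed(peak_rps, per_balancer_rps, headroom=0.0):
    """Estimate the number of balancers for a given peak request rate.

    headroom is an optional safety margin (0.2 means planning for 20%
    above the expected peak).
    """
    target = peak_rps * (1.0 + headroom)
    return max(1, math.ceil(target / per_balancer_rps))


xeon_count = balancers_needed(10_000, RPS_XEON_4_THREADS)
micro_count = balancers_needed(10_000, RPS_EC2_MICRO)
```

For a hypothetical peak of 10,000 requests per second, this gives 4 balancers on the Xeon hardware or 13 on EC2 Micro Instances.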

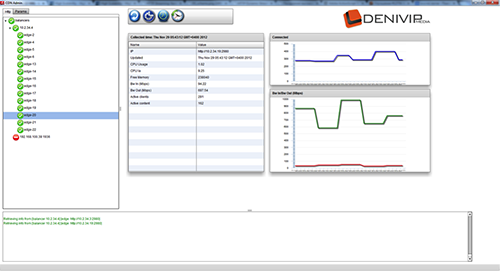

Balancer API

All statistics used by the balancer to route customers are also available through the API. You can use the interface directly from your backend systems, or monitor and analyze the statistics using a dedicated GUI (see the snapshot). This utility is built on the balancer API. In addition to monitoring the state of the platform, it lets you manually reconfigure a range of balancer parameters on the fly.

Another option for monitoring and aggregating statistics is to use third-party tools for analyzing the balancer logs.

Here are the key statistical parameters that we have most frequently accessed via the balancer API:

1. The number of requests to the balancer per second

2. The number of customers on each Edge and on the platform in total

3. The number of unique units of content output concurrently

4. The total traffic from each Edge and from the platform as a whole

5. The values of broken edge counters.
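As an illustration of how a backend might aggregate these parameters, here is a Python sketch that computes platform totals from a per-Edge statistics document. The JSON shape and every field name in it are hypothetical; the actual response format of the balancer API is not shown in this post.

```python
import json

# Hypothetical response body, used only to illustrate the aggregation.
payload = json.loads("""
{
  "requests_per_second": 1200,
  "edges": [
    {"name": "edge-1", "customers": 350, "traffic_mbps": 900, "broken_count": 0},
    {"name": "edge-2", "customers": 410, "traffic_mbps": 1100, "broken_count": 2}
  ]
}
""")


def platform_totals(stats):
    """Sum per-Edge values and list Edges with a non-zero broken edge counter."""
    edges = stats["edges"]
    return {
        "customers": sum(e["customers"] for e in edges),
        "traffic_mbps": sum(e["traffic_mbps"] for e in edges),
        "broken_edges": [e["name"] for e in edges if e["broken_count"] > 0],
    }


totals = platform_totals(payload)
```

The same totals could feed an alerting rule, for example one that triggers when `broken_edges` is not empty.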

Summary

The basic functionality of the current balancer release is as follows:

- Choosing the most effective server to process a given user request

- Cost optimization for content delivery

- Offloading peak traffic that exceeds the capacity of the native infrastructure to CDN and cloud infrastructures (instead of degrading overall video delivery)

- Dynamic video platform reconfiguring on-the-fly

- Manual traffic control

- Identifying infrastructure issues (broken edge)

- Real-time statistics

Roadmap for the nearest releases:

- Refinement of the balancing algorithm – the maximum localization of the cached content units. Design of logic based on the existing load statistics to enable the maximum platform capacity per user at minimal financial costs (self-learning algorithm). Enhancing the overall stability of the system: after random surges, the system should quickly revert to a stable cache state.

- Enhanced integration with external CDNs – tracking of balancing statistics from third-party CDNs (budget limitations, alerts, etc.).

- Development of the statistics collection and visualization tools

- Integration of cache location services in balancing algorithms (to enhance the current route-based mechanisms)

We look forward to hearing about your projects and the specifics of your video traffic distribution.

Commercial terms

The content balancing system is available in the following configurations:

- License for Amazon EC2 balancer instance – $5 per month (plus the cost of the standard Amazon EC2 service)

- Balancing service running on our infrastructure – $200 per month (500 balancing events per second = 30,000 video launches per minute)

- Unlimited license to install on any server – $4,500.