
Apple's iOS mobile devices have deservedly won wide popularity among consumers. Every Apple event is followed with keen interest, as people expect new functionality and improvements to existing features. But ordinary users only see the surface, judging the product by its exterior. Developers see much more. Of the entire four-day WWDC event, public attention went only to the keynote presentation, which gave an overview of all the major innovations. All the other presentations, about one hundred in total, were dedicated to developers. From this ratio we can estimate the hidden mass of the iceberg, which is no less fascinating than its tip. Opening a series of posts on iOS development, we will describe the frameworks built into Apple devices for handling online video.

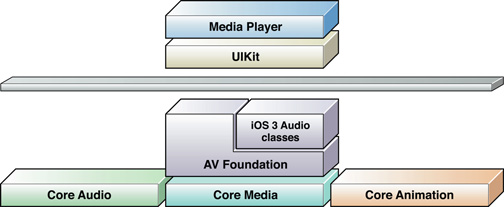

The hierarchy of iOS video frameworks includes the following libraries:

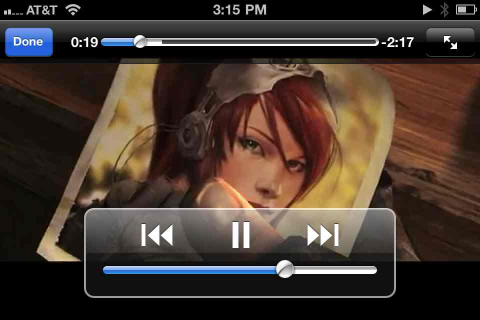

- The Media Player Framework is an easy-to-use framework that supports most features out of the box. It is sufficient for most applications and provides a familiar user interface.

- The AV Foundation Framework provides a complete set of features for controlling video playback, allowing you to implement a player of any complexity. It also includes features for capturing, editing, recording and transcoding audio and video. In this article, we'll focus on playback.

- The Core Media and Core Video Frameworks define the low-level data types and system interfaces for manipulating media content.

Media Player Framework

Media Player Framework provides the `MPMoviePlayerController` class, which implements a video player that is ready to use in native applications and is virtually identical to the standard Video application.

The framework is so simple that you can launch the player with just a few lines of code using the `MPMoviePlayerViewController` class. In this case, the player controller is presented on top of the application, and the application interface remains unavailable to the user until the player is closed:

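```objc
#import <MediaPlayer/MediaPlayer.h>

// Inside a UIViewController method; media_url is an NSString with the video address.
MPMoviePlayerViewController *theMoviePlayer =
    [[MPMoviePlayerViewController alloc]
        initWithContentURL:[NSURL URLWithString:media_url]];
[self presentMoviePlayerViewControllerAnimated:theMoviePlayer];
```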

In a slightly more sophisticated variant, you create an instance of `MPMoviePlayerController` initialized with a content URL and add its `view` property to the view hierarchy of the current controller. As in the previous case, the player can occupy the full screen, but now you can place your own components on top of it.
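A minimal sketch of the embedded variant, assuming ARC and a hypothetical `EmbeddedPlayerViewController` class; the URL is a placeholder and the `LIVE` label stands in for whatever custom component you want to overlay. Note that `MPMoviePlayerController` and `MPMoviePlayerViewController` were deprecated in iOS 9 in favor of AVKit's `AVPlayerViewController`, so this reflects the API described in the text rather than current practice.

```objc
#import <UIKit/UIKit.h>
#import <MediaPlayer/MediaPlayer.h>

// Hypothetical controller that embeds the player view instead of presenting it.
@interface EmbeddedPlayerViewController : UIViewController
@property (nonatomic, strong) MPMoviePlayerController *moviePlayer;
@end

@implementation EmbeddedPlayerViewController

- (void)viewDidLoad {
    [super viewDidLoad];

    // Placeholder content link.
    NSURL *url = [NSURL URLWithString:@"http://example.com/video.mp4"];
    self.moviePlayer = [[MPMoviePlayerController alloc] initWithContentURL:url];

    // Add the player's view to this controller's hierarchy, filling its bounds.
    UIView *playerView = self.moviePlayer.view;
    playerView.frame = self.view.bounds;
    playerView.autoresizingMask =
        UIViewAutoresizingFlexibleWidth | UIViewAutoresizingFlexibleHeight;
    [self.view addSubview:playerView];

    // A custom component added after the player view, so it is drawn on top.
    UILabel *badge = [[UILabel alloc] initWithFrame:CGRectMake(20, 20, 120, 30)];
    badge.text = @"LIVE";
    badge.textColor = [UIColor whiteColor];
    badge.backgroundColor = [UIColor clearColor];
    [self.view addSubview:badge];

    [self.moviePlayer prepareToPlay];
    [self.moviePlayer play];
}

@end
```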

You can also subscribe to the notifications the player posts and respond to them in your code when the user starts playback, pauses, seeks, starts or stops AirPlay playback, changes the scaling mode or switches to full screen, as well as when the video loading state changes or metadata becomes available. The same playback actions can be triggered programmatically through the `MPMediaPlayback` protocol.
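The sketch below shows how such notifications might be observed, assuming ARC and a hypothetical `PlayerObserver` helper class. It listens to three of the notifications `MPMoviePlayerController` posts (playback state, load state and end of playback) and uses the `MPMediaPlayback` properties and methods to seek and resume from code.

```objc
#import <UIKit/UIKit.h>
#import <MediaPlayer/MediaPlayer.h>

// Hypothetical helper object that listens to one player's notifications.
@interface PlayerObserver : NSObject
- (instancetype)initWithPlayer:(MPMoviePlayerController *)player;
- (void)skipForwardTenSeconds;
@end

@implementation PlayerObserver {
    MPMoviePlayerController *_player;
}

- (instancetype)initWithPlayer:(MPMoviePlayerController *)player {
    if ((self = [super init])) {
        _player = player;
        NSNotificationCenter *center = [NSNotificationCenter defaultCenter];
        // Play, pause, seek and stop all change the playback state.
        [center addObserver:self selector:@selector(playbackStateChanged:)
                       name:MPMoviePlayerPlaybackStateDidChangeNotification
                     object:player];
        // Buffering progress and stalls are reported as load state changes.
        [center addObserver:self selector:@selector(loadStateChanged:)
                       name:MPMoviePlayerLoadStateDidChangeNotification
                     object:player];
        // Playback reached the end, failed, or the user exited.
        [center addObserver:self selector:@selector(playbackFinished:)
                       name:MPMoviePlayerPlaybackDidFinishNotification
                     object:player];
    }
    return self;
}

- (void)dealloc {
    [[NSNotificationCenter defaultCenter] removeObserver:self];
}

- (void)playbackStateChanged:(NSNotification *)note {
    if (_player.playbackState == MPMoviePlaybackStatePaused) {
        NSLog(@"Paused at %.1f s", _player.currentPlaybackTime);
    }
}

- (void)loadStateChanged:(NSNotification *)note {
    if (_player.loadState & MPMovieLoadStateStalled) {
        NSLog(@"Buffering...");
    }
}

- (void)playbackFinished:(NSNotification *)note {
    NSNumber *reason = note.userInfo[MPMoviePlayerPlaybackDidFinishReasonUserInfoKey];
    NSLog(@"Finished, reason %@", reason);
}

// The same actions driven from code through the MPMediaPlayback protocol.
- (void)skipForwardTenSeconds {
    _player.currentPlaybackTime += 10.0;
    [_player play];
}

@end
```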

The Media Player framework can keep playing your content in the background when the application is minimized or the device screen is locked. To enable this, set the `UIBackgroundModes` key in the application's Info.plist to `audio`, and set the audio session category to `AVAudioSessionCategoryPlayback` using the `AVAudioSession` class.
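A minimal sketch of the audio session part, assuming the Info.plist entry is already in place (it is shown in the comment) and that a hypothetical `ConfigureBackgroundPlayback` function is called once at launch, for example from `application:didFinishLaunchingWithOptions:`.

```objc
#import <AVFoundation/AVFoundation.h>

// Assumes the app's Info.plist already contains:
//   <key>UIBackgroundModes</key>
//   <array><string>audio</string></array>
// Hypothetical helper; returns NO and fills *error if the session refuses.
BOOL ConfigureBackgroundPlayback(NSError **error) {
    AVAudioSession *session = [AVAudioSession sharedInstance];
    // The Playback category keeps audio running when the screen locks
    // or the app moves to the background.
    if (![session setCategory:AVAudioSessionCategoryPlayback error:error]) {
        return NO;
    }
    return [session setActive:YES error:error];
}
```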

Problems with this framework begin when you try to change the player's behavior, implement a feature missing from the standard player, or change its visual layout. In such cases, the AV Foundation framework comes to the developer's rescue.

AV Foundation Framework

Although AV Foundation has no ready-made visual components such as `MPMoviePlayerController`, it provides a richer programming interface for handling media data, allowing you to build your own player entirely from scratch.

Here are the core classes of the framework used for playback.

`AVAsset` is the core class that describes a unit of media content as a whole: its tracks, metadata, etc. The important thing about this class is that it may not be ready for use immediately after initialization. Many content properties, such as the duration, the available tracks and even whether the content can be played at all, become available asynchronously, or may never be obtained, for instance, if the content cannot be downloaded over the network.

The playback state of an asset has been moved into a separate `AVPlayerItem` class, which allows you to play the same asset independently in several players at once. `AVPlayerItem` instances are played by an `AVPlayer` object, which sends its output to a special Core Animation layer of type `AVPlayerLayer`. You can apply to this layer all the design effects, animations and geometric transformations that work with ordinary layers. The minimum code needed to play back a video is shown in the developer guide section "Putting It All Together: Playing a Video File Using AVPlayerLayer".
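The steps above might be combined roughly as follows: load the asset's keys asynchronously, check that it is playable, then build the item, the player and the layer. This is a sketch assuming ARC; `SimplePlayerViewController` and the URL are placeholders, and error handling is reduced to a log message.

```objc
#import <UIKit/UIKit.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical controller used only for this sketch.
@interface SimplePlayerViewController : UIViewController
@property (nonatomic, strong) AVPlayer *player;
- (void)startPlaybackWithAsset:(AVAsset *)asset;
@end

@implementation SimplePlayerViewController

- (void)viewDidLoad {
    [super viewDidLoad];
    NSURL *url = [NSURL URLWithString:@"http://example.com/movie.mp4"];  // placeholder
    AVURLAsset *asset = [AVURLAsset URLAssetWithURL:url options:nil];
    NSArray *keys = @[@"playable", @"tracks", @"duration"];

    // Asset properties may not be known yet, so load them asynchronously.
    [asset loadValuesAsynchronouslyForKeys:keys completionHandler:^{
        // The handler may run on any queue; UI work belongs on the main queue.
        dispatch_async(dispatch_get_main_queue(), ^{
            NSError *error = nil;
            AVKeyValueStatus status =
                [asset statusOfValueForKey:@"playable" error:&error];
            if (status != AVKeyValueStatusLoaded || !asset.playable) {
                NSLog(@"Cannot play asset: %@", error);
                return;
            }
            [self startPlaybackWithAsset:asset];
        });
    }];
}

- (void)startPlaybackWithAsset:(AVAsset *)asset {
    // The item holds the playback state; the asset itself stays shareable.
    AVPlayerItem *item = [AVPlayerItem playerItemWithAsset:asset];
    self.player = [AVPlayer playerWithPlayerItem:item];

    // Render into a Core Animation layer that can be transformed like any layer.
    AVPlayerLayer *layer = [AVPlayerLayer playerLayerWithPlayer:self.player];
    layer.frame = self.view.bounds;
    layer.videoGravity = AVLayerVideoGravityResizeAspect;
    [self.view.layer addSublayer:layer];

    [self.player play];
}

@end
```

Calling `play` before the item has buffered is allowed; playback starts once the item is ready.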

In addition to basic playback, AV Foundation and other iOS frameworks provide many other features, so you can do virtually anything you can imagine with your media content. Here are the most fascinating ones.

The `AVAssetImageGenerator` class lets you extract individual frames, frame sequences or preview thumbnails from a video asset, independently of the current playback.
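A sketch of fetching a single thumbnail synchronously, assuming ARC and a hypothetical helper `CopyThumbnailAtSecond`. For many frames, `generateCGImagesAsynchronouslyForTimes:completionHandler:` avoids blocking the calling thread.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: returns a thumbnail near `seconds`, or NULL on failure.
// The caller owns the image and must release it with CGImageRelease().
CGImageRef CopyThumbnailAtSecond(AVAsset *asset, Float64 seconds, NSError **error) {
    AVAssetImageGenerator *generator =
        [[AVAssetImageGenerator alloc] initWithAsset:asset];
    // Apply the track's rotation, e.g. for videos recorded in portrait.
    generator.appliesPreferredTrackTransform = YES;
    // Scale the image to fit within 320x320, preserving the aspect ratio.
    generator.maximumSize = CGSizeMake(320, 320);

    CMTime requested = CMTimeMakeWithSeconds(seconds, 600);
    CMTime actual = kCMTimeInvalid;
    CGImageRef image = [generator copyCGImageAtTime:requested
                                         actualTime:&actual
                                              error:error];
    // With the default tolerances the frame may come from a nearby time.
    if (image != NULL) {
        NSLog(@"Requested %.2f s, got %.2f s", seconds, CMTimeGetSeconds(actual));
    }
    return image;
}
```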

The `AVAssetReader` class gives you the most complete control over content playback. With it, you can read individual samples from audio and video tracks in a format suited to further processing. You can then process these samples in any way and show them to the user, or write them to a file using `AVAssetWriter`. For instance, you can add sound effects, display a visual representation of an audio track, apply filters to the video, map the running video onto the surface of a 3D object as a texture, etc. In general, the possibilities are limited only by the developer's imagination and the frame processing rate, since `AVAssetReader` cannot guarantee that samples are read in real time.
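The read loop might look roughly like this. It is a sketch assuming ARC, a local file asset (so its tracks can be read synchronously) and a hypothetical `ReadVideoFrames` helper; it asks for BGRA pixel buffers and passes each one, with its presentation time, to a caller-supplied block. Writing processed frames back out with `AVAssetWriter` is left out.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: decodes every video frame of a local asset to a BGRA
// pixel buffer and passes it to `process`. Returns the number of frames read,
// or -1 on error. Reading is not paced to real time.
NSInteger ReadVideoFrames(AVAsset *asset,
                          void (^process)(CVPixelBufferRef frame, CMTime pts)) {
    NSArray *tracks = [asset tracksWithMediaType:AVMediaTypeVideo];
    if (tracks.count == 0) {
        return -1;  // no video track, or the asset could not be opened
    }
    NSError *error = nil;
    AVAssetReader *reader = [[AVAssetReader alloc] initWithAsset:asset error:&error];
    if (reader == nil) {
        return -1;
    }

    // Request decoded frames in a pixel format convenient for processing.
    NSDictionary *settings = @{
        (__bridge NSString *)kCVPixelBufferPixelFormatTypeKey :
            @(kCVPixelFormatType_32BGRA)
    };
    AVAssetReaderTrackOutput *output =
        [AVAssetReaderTrackOutput assetReaderTrackOutputWithTrack:tracks[0]
                                                   outputSettings:settings];
    [reader addOutput:output];
    if (![reader startReading]) {
        return -1;
    }

    NSInteger count = 0;
    CMSampleBufferRef sample = NULL;
    while ((sample = [output copyNextSampleBuffer]) != NULL) {
        CVPixelBufferRef frame = CMSampleBufferGetImageBuffer(sample);
        if (frame != NULL) {
            process(frame, CMSampleBufferGetPresentationTimeStamp(sample));
            count++;
        }
        CFRelease(sample);  // copyNextSampleBuffer returns an owned reference
    }
    return reader.status == AVAssetReaderStatusCompleted ? count : -1;
}
```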

An asset can contain several alternative audio and subtitle tracks, for instance, to support different languages. You can choose among these alternatives with the `[AVPlayerItem selectMediaOption:inMediaSelectionGroup:]` method.
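A sketch of choosing an audio or subtitle option by language, assuming ARC, that the asset's media selection options have already been loaded, and a hypothetical helper `SelectMediaOptionForLocale`. Pass `AVMediaCharacteristicAudible` for audio tracks or `AVMediaCharacteristicLegible` for subtitles.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: selects the first option in the group for `characteristic`
// (AVMediaCharacteristicAudible or AVMediaCharacteristicLegible) that matches
// `locale`. Returns NO when the asset has no such group or no matching option.
BOOL SelectMediaOptionForLocale(AVPlayerItem *item,
                                NSString *characteristic,
                                NSLocale *locale) {
    // Assumes the asset's availableMediaCharacteristicsWithMediaSelectionOptions
    // key was loaded beforehand; otherwise this call may block while loading.
    AVMediaSelectionGroup *group =
        [item.asset mediaSelectionGroupForMediaCharacteristic:characteristic];
    if (group == nil) {
        return NO;
    }
    NSArray *matches =
        [AVMediaSelectionGroup mediaSelectionOptionsFromArray:group.options
                                                   withLocale:locale];
    if (matches.count == 0) {
        return NO;
    }
    [item selectMediaOption:matches[0] inMediaSelectionGroup:group];
    return YES;
}
```

For example, `SelectMediaOptionForLocale(item, AVMediaCharacteristicLegible, [[NSLocale alloc] initWithLocaleIdentifier:@"fr"])` would switch on French subtitles if the stream offers them.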

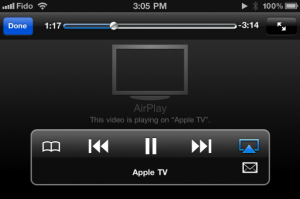

With AirPlay, developers of mobile applications can use a TV connected to an Apple TV 2 set-top box to mirror the device's main screen or to show additional content. In AV Foundation, AirPlay support is controlled by the `AVPlayer` property `usesAirPlayVideoWhileAirPlayScreenIsActive`, so the technology can be enabled with a single flag. AirPlay works not only in native applications but also in the Web environment.
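In code, this comes down to a couple of property assignments, as in the sketch below with a hypothetical `EnableAirPlayVideo` function. These are the iOS 5 property names used in the text; iOS 6 deprecated them in favor of `allowsExternalPlayback` and `usesExternalPlaybackWhileExternalScreenIsActive`.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper using the iOS 5 AirPlay properties of AVPlayer.
void EnableAirPlayVideo(AVPlayer *player) {
    // Allow the player to route video to an AirPlay receiver such as Apple TV.
    player.allowsAirPlayVideo = YES;
    // While the screen is being mirrored, send the video itself over AirPlay
    // instead of mirroring the rendered screen.
    player.usesAirPlayVideoWhileAirPlayScreenIsActive = YES;
}
```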

The `AVSynchronizedLayer` class lets you synchronize the timing of other layers in the application with content playback. This can be useful, for instance, to tie the animation speed of interface elements to the video playback rate, or to run an animation backwards when the video is rewound.
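A sketch of a fade-in badge tied to the item's timeline, assuming ARC, a hypothetical `AddSynchronizedBadge` helper and a host layer such as the one holding the `AVPlayerLayer`. Because the badge lives inside the synchronized layer, pausing, seeking or changing the playback rate affects its animation in the same way as the video.

```objc
#import <UIKit/UIKit.h>
#import <QuartzCore/QuartzCore.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: adds a badge that fades in over the first 5 seconds
// of the item's timeline (not wall-clock time) and returns the sync layer.
AVSynchronizedLayer *AddSynchronizedBadge(AVPlayerItem *item, CALayer *hostLayer) {
    AVSynchronizedLayer *syncLayer =
        [AVSynchronizedLayer synchronizedLayerWithPlayerItem:item];
    syncLayer.frame = hostLayer.bounds;

    CALayer *badge = [CALayer layer];
    badge.frame = CGRectMake(20, 20, 80, 40);
    badge.backgroundColor = [UIColor redColor].CGColor;
    [syncLayer addSublayer:badge];

    CABasicAnimation *fade = [CABasicAnimation animationWithKeyPath:@"opacity"];
    fade.fromValue = @0.0f;
    fade.toValue = @1.0f;
    // A beginTime of 0 would mean "now"; this constant means item time zero.
    fade.beginTime = AVCoreAnimationBeginTimeAtZero;
    fade.duration = 5.0;
    // Keep the animation attached so seeking back replays it.
    fade.removedOnCompletion = NO;
    fade.fillMode = kCAFillModeBoth;
    [badge addAnimation:fade forKey:@"fadeIn"];

    [hostLayer addSublayer:syncLayer];
    return syncLayer;
}
```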

Apple HTTP Live Streaming

In our blog, we usually pay great attention to HTTP Live Streaming. This technology is key to developing applications for watching online video. At the transport level, it supports all the advanced functionality beyond basic playback that AV Foundation makes available. The HTTP LS standard was developed and patented by Apple. It is gaining immense popularity in live video streaming, as it has a number of features that make it the technology of choice for delivering video over a network. It is supported by most mobile and video devices, such as TVs. New features described in the standard appear on Apple devices first. A major benefit of HTTP LS is its ability to automatically adapt the video stream to the available bandwidth. Other features enabled by this technology include:

- Live video streaming with a sliding window over the latest N hours.

- Encryption of transmitted content and secure authentication of viewers over HTTPS.

- Interoperability with most content servers, media distribution networks, caching systems, routers and firewalls, since the protocol runs on top of HTTP.

- Alternative streams can be used not only for multi-bitrate delivery but also for fault tolerance. You can list two streams served from different data centers in your playlist, and the application will automatically select whichever stream is available at the moment.

- Transfer of metadata linked to the playback timeline. This feature can be used, for instance, to insert an advertisement or announce the next TV show.

- Subtitles.

- Fast forward and rewind, and scrubbing using key frames.
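To see bandwidth adaptation at work, you can watch the player item's access log: each switch to another variant produces a new log event. The sketch below assumes iOS 6 or later (where `AVPlayerItemNewAccessLogEntryNotification` is available) and uses a hypothetical `ObserveBitrateSwitches` helper.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: logs every new access-log entry of an HLS player item.
// Returns the observer token; pass it to -removeObserver: when done.
id ObserveBitrateSwitches(AVPlayerItem *item) {
    return [[NSNotificationCenter defaultCenter]
        addObserverForName:AVPlayerItemNewAccessLogEntryNotification
                    object:item
                     queue:[NSOperationQueue mainQueue]
                usingBlock:^(NSNotification *note) {
        // Read the item from the notification to avoid retaining it in the block.
        AVPlayerItem *source = note.object;
        AVPlayerItemAccessLogEvent *event = source.accessLog.events.lastObject;
        // indicatedBitrate is the variant's declared bitrate;
        // observedBitrate is the throughput actually measured.
        NSLog(@"variant %.0f bps, measured %.0f bps, stalls %ld",
              event.indicatedBitrate, event.observedBitrate,
              (long)event.numberOfStalls);
    }];
}
```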

Apple Application Requirements

When reviewing applications before publishing them on the App Store, Apple's testers also check video streaming functionality. The basic requirements are:

- HTTP LS is mandatory for streaming video over cellular networks.

- In multi-bitrate streams, the audio tracks of all the bitrate variants should be identical, to avoid artifacts when the player switches between streams.

- For the same reason, all the streams should have the same aspect ratio.

- One of the streams must contain audio only and be encoded at 64 kbps.

For the maximum and recommended video encoding settings for network streaming, refer to Best Practices for Creating and Deploying HTTP Live Streaming Media for the iPhone and iPad.

* * *

In this post, we have only skimmed the surface of the many features available on the iOS platform. In fact, there are many more, and the number of feature combinations that can be built from the available frameworks is countless. However, even the iOS SDK has some notable gaps. The public APIs offer no tools for live streaming of media from the device camera and microphone. Applications that can do this, such as FaceTime, Skype and Ustream, are built on proprietary code that is not available to the average developer. Another gap, particularly noticeable when working with professional video, is the lack of a full-fledged DRM system such as Flash Access (iOS natively supports playback of encrypted content, but key management requires third-party libraries such as Adobe Access or Widevine). FairPlay, which Apple uses to protect its own content, is not available to third-party developers, even on a commercial basis.

In preparing this review, we used the following sources:

- AV Foundation Programming Guide

- AV Foundation Framework Reference

- Presentations and code samples shown at WWDC 2010 and WWDC 2011 (available to registered developers only)